Reinforcement Learning from Human Feedback (RLHF) has emerged as the cornerstone technique for aligning Large Language Models (LLMs) like ChatGPT and Claude with human values, instructions, and safety standards. Moving beyond simply predicting the next word, RLHF trains models to be genuinely helpful, harmless, and honest (HHH).

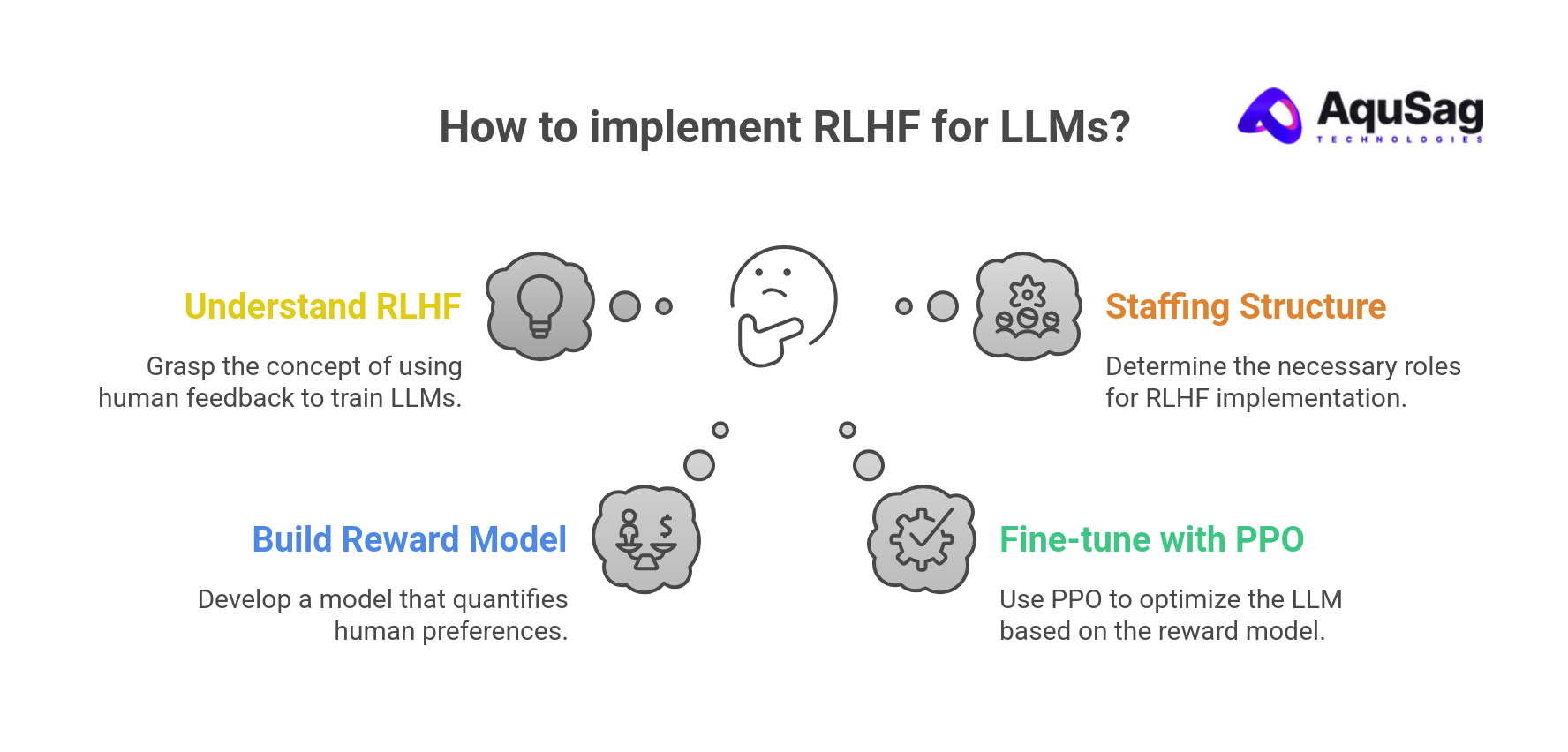

For organizations looking to build state-of-the-art generative AI, mastering the RLHF pipeline is non-negotiable. This detailed guide breaks down the complex workflow, reveals the critical staffing structure, and outlines the best practices for success.

What is Reinforcement Learning from Human Feedback (RLHF) in Simple Terms?

RLHF is a machine learning process that uses human preference data to create a Reward Model (RM). This RM then acts as a surrogate for human judgment, providing a quantifiable 'score' (a reward) to guide the final fine-tuning of the LLM using a reinforcement learning algorithm like Proximal Policy Optimization (PPO).

Think of it like training a dog: instead of programming every action, you give the dog a reward (a treat) when it performs a desired behavior. RLHF does the same for an LLM: it teaches the model to maximize the 'human preference reward' it receives.

What are the Core Stages of the RLHF Workflow?

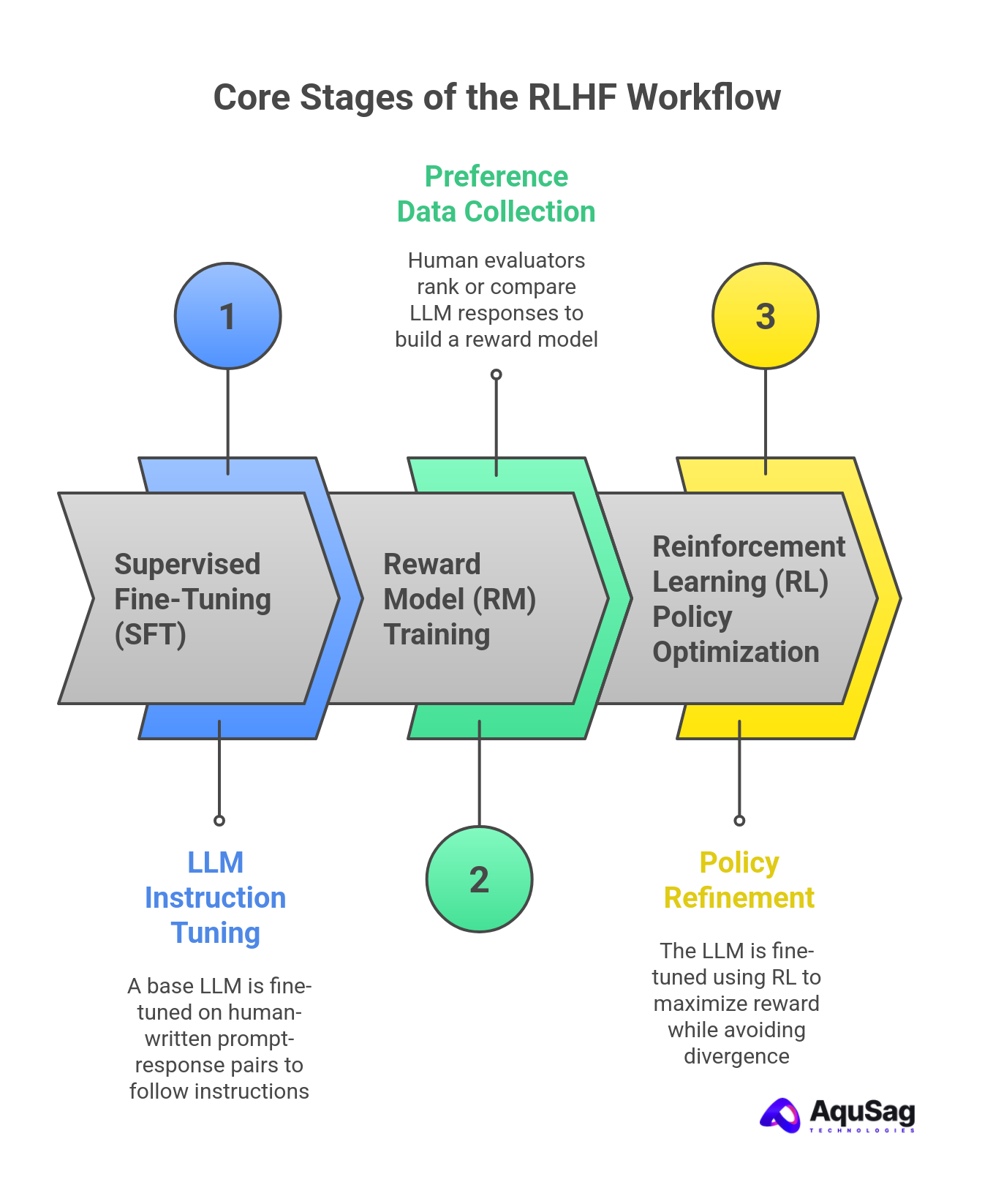

The RLHF process is typically executed in three major stages following the initial training of a foundational LLM:

1. Supervised Fine-Tuning (SFT)

- Goal: To bootstrap the LLM to follow instructions and generate high-quality, relevant responses.

- Process: A small, high-quality dataset of human-written prompt-response pairs is used to fine-tune the base LLM. This prepares the model for the more nuanced alignment of the later RLHF stages, and it often yields the largest initial improvement in instruction following.

2. Reward Model (RM) Training and Human Preference Data Collection

- Goal: To build a scalar-output model that can accurately predict human preference.

- Data Collection: This is the most crucial human-in-the-loop step. Human evaluators (often called Annotators or Raters) are presented with a prompt and several different responses generated by the SFT model. The human task is typically to rank or compare these responses based on criteria like relevance, helpfulness, safety, and factuality.

- The preference data format is often a set of pairwise comparisons (e.g., "Response A is better than Response B").

- RM Training: The collected preference data is used to train a separate language model, the Reward Model. This model learns to assign a higher score (reward) to the preferred response than to the rejected response.
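To make the RM training step concrete, here is a minimal sketch of a pairwise preference loss in the Bradley-Terry style, which is one common way to train on "A is better than B" comparisons. The record layout, field names, and example scores are hypothetical illustrations, not a prescribed format, and a real Reward Model would produce the scores with a neural network rather than take them as plain numbers.

```python
import math


def pairwise_preference_loss(score_chosen: float, score_rejected: float) -> float:
    """Negative log-likelihood that the chosen response beats the rejected one.

    Equals -log(sigmoid(score_chosen - score_rejected)), computed in a
    numerically stable way.
    """
    margin = score_chosen - score_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical shape of one pairwise comparison collected from a rater.
comparison = {
    "prompt": "Summarize this contract clause.",
    "chosen": "Response A",
    "rejected": "Response B",
}

# Assumed RM scores for the two responses (illustrative numbers only).
loss_when_rm_agrees = pairwise_preference_loss(2.0, -1.0)
loss_when_rm_disagrees = pairwise_preference_loss(-1.0, 2.0)
print(loss_when_rm_agrees, loss_when_rm_disagrees)
```

The loss is small when the RM already scores the preferred response higher and large when it ranks the pair the wrong way round, so minimizing it pushes the two scores apart in the direction the rater chose.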

3. Reinforcement Learning (RL) Policy Optimization

- Goal: To fine-tune the LLM (the Policy) to generate responses that maximize the reward predicted by the RM.

- Process: An RL algorithm, most commonly PPO, is used. The LLM generates a response, the Reward Model scores it, and the PPO algorithm updates the LLM’s weights to increase the likelihood of receiving higher rewards in the future.

- Crucial Component: KL Divergence Penalty: A penalty is typically applied during this step to keep the fine-tuned model from deviating too far from the original SFT model. This limits "reward hacking" (finding ways to maximize the RM score without actually producing high-quality, aligned outputs), although it does not eliminate it.
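The interaction between the RM score and the KL penalty can be sketched as a single "shaped" reward. This is a simplified illustration, assuming a per-response KL estimate built from the log-probabilities of the sampled tokens; the function name and the coefficient `beta` are hypothetical, and production PPO implementations usually apply the penalty per token and tune or adapt the coefficient.

```python
def kl_shaped_reward(rm_score, policy_logprobs, reference_logprobs, beta=0.1):
    """Combine the Reward Model score with a KL penalty against the SFT model.

    policy_logprobs / reference_logprobs: log-probabilities that the current
    policy and the frozen SFT (reference) model assign to each sampled token.
    beta: assumed penalty strength (illustrative default).
    """
    if len(policy_logprobs) != len(reference_logprobs):
        raise ValueError("log-probability lists must have the same length")
    # Sample-based estimate of KL(policy || reference) for this response.
    kl_estimate = sum(p - r for p, r in zip(policy_logprobs, reference_logprobs))
    return rm_score - beta * kl_estimate


# The policy has become more confident than the SFT model on both tokens,
# so part of the RM reward is taken back by the penalty.
reward = kl_shaped_reward(
    rm_score=1.0,
    policy_logprobs=[-1.0, -2.0],
    reference_logprobs=[-1.5, -2.5],
    beta=0.1,
)
print(reward)
```

A larger `beta` keeps the policy closer to the SFT model at the cost of slower reward improvement, while a smaller one allows more drift and more room for reward hacking.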

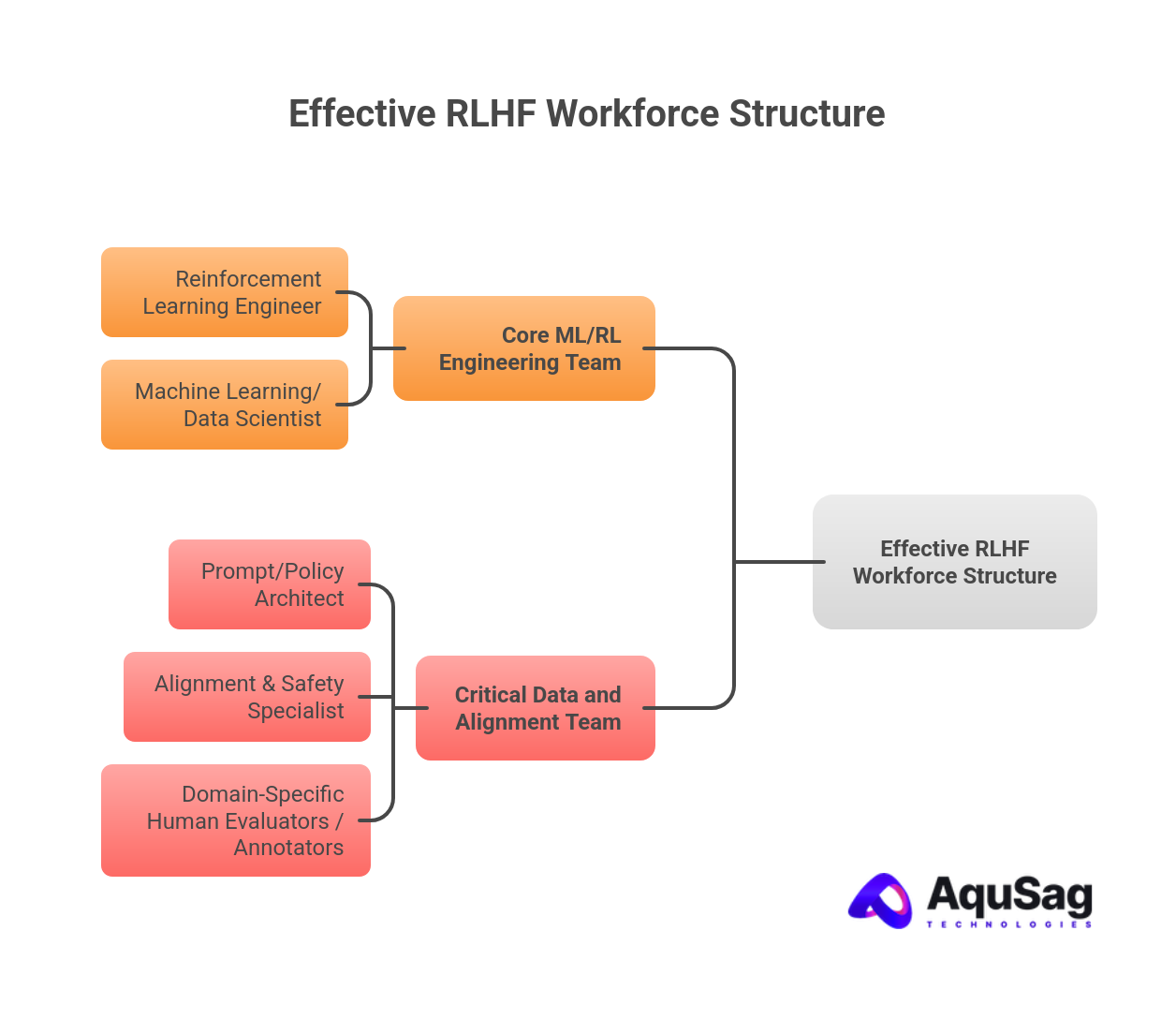

How is an Effective RLHF Workforce Structured?

Building a successful RLHF pipeline requires a highly specialized, multidisciplinary team. The following roles are essential for any organization tackling this challenge, either with in-house teams or by partnering with a specialized RLHF staffing vendor like aqusag.com.

1. The Core ML/RL Engineering Team

- Reinforcement Learning Engineer:

- Responsibilities: Implementing and optimizing the alignment algorithms (PPO, DPO, etc.), managing the large-scale distributed training infrastructure, and handling the complex interaction between the LLM and the Reward Model.

- Machine Learning/Data Scientist:

- Responsibilities: Designing, training, and evaluating the Reward Model (RM); analyzing preference data distribution, identifying biases, and implementing robustness techniques to ensure the RM is a reliable proxy for human values.

2. The Critical Data and Alignment Team (The "Human" in RLHF)

- Prompt/Policy Architect:

- Responsibilities: Designing the initial SFT dataset, crafting the prompts for human labeling, and strategically optimizing the prompt distributions over time to cover diverse and challenging use cases.

- Alignment & Safety Specialist:

- Responsibilities: Defining the explicit, clear, and comprehensive labeling guidelines (rubrics) for HHH criteria; auditing model outputs for safety, bias, and factual correctness; and ensuring the model adheres to corporate and ethical standards.

- Domain-Specific Human Evaluators / Annotators:

- Responsibilities: Providing the raw human preference data by accurately and consistently ranking model outputs according to the established rubrics. This group is the backbone of the RLHF workforce, and the work is often outsourced to a high-quality reward model labeling vendor like aqusag.com for scale and domain expertise.

Hiring Tip: For highly specialized LLMs (e.g., LegalTech, FinTech, Medical AI), the Annotators must have relevant domain expertise. aqusag.com specializes in sourcing and managing highly-vetted, domain-expert RLHF teams to ensure the feedback is not just plentiful, but expert-level.

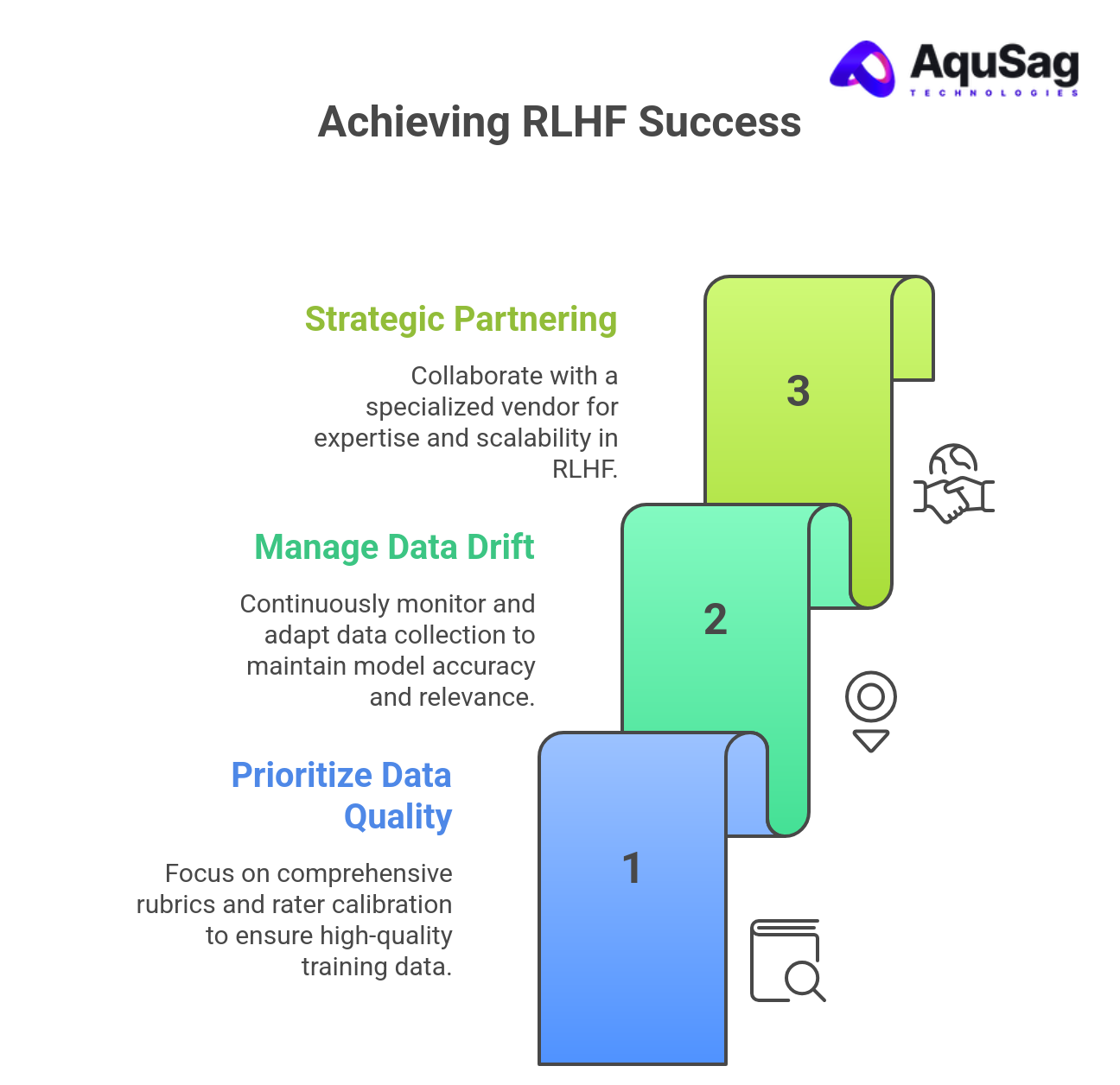

What are the Best Practices for RLHF Success?

Successfully implementing RLHF requires more than just technical expertise; it demands a structured approach to data quality, workflow management, and vendor partnership.

1. Prioritize Data Quality Over Quantity

The RM is only as good as the human data it’s trained on.

- Comprehensive Rubrics: Create detailed, unambiguous labeling guidelines that explicitly define what constitutes a "good" versus a "bad" response for your specific application.

- Rater Calibration and Agreement: Continuously measure inter-rater agreement (IRA). Low IRA indicates flawed rubrics or a need for better training and calibration of your RLHF workforce.

- Diversity in Prompts and Raters: Ensure the prompts cover a wide range of topics and difficulties, and that your rater pool is diverse enough to avoid amplifying the biases of any single group.
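As one way to put a number on inter-rater agreement, the sketch below computes Cohen's kappa for two raters who labeled the same comparisons. Kappa is a common choice but only one of several agreement metrics, and the rater labels here are made-up illustrative data.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two raters on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(labels_a)
    # Fraction of items on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical data: which response ("A" or "B") each rater preferred.
rater_1 = ["A", "A", "B", "B", "A", "B"]
rater_2 = ["A", "B", "B", "B", "A", "A"]
print(cohens_kappa(rater_1, rater_2))
```

A value near 1 indicates strong agreement, while a value near 0 means the raters agree about as often as chance would predict, which is usually a signal to revisit the rubric or recalibrate the raters.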

2. Manage Data and Model Drift

LLMs are dynamic, and so are human expectations.

- Drift Detection: Continuously monitor the LLM's new outputs and the distribution of the Reward Model's scores. If the model starts producing novel, out-of-distribution responses, the RM's scores may no longer track human preference (Reward Model Drift).

- Adaptive Data Collection: Focus new human labeling efforts on examples where the RM is least certain or where human-model disagreement is highest. This is known as Active Learning and is a powerful strategy to maximize the value of expensive human feedback.
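The adaptive collection idea can be sketched as a simple uncertainty-based selection rule. This sketch assumes the RM's uncertainty about a pair can be approximated by how close its two scores are, which is only one possible heuristic; the function name, tuple layout, and scores are hypothetical.

```python
def select_for_labeling(candidates, budget):
    """Pick the response pairs the Reward Model is least sure about.

    candidates: list of (pair_id, rm_score_a, rm_score_b) tuples.
    budget: how many pairs can be sent to human raters.
    A small score gap means the RM sees the two responses as nearly tied.
    """
    ranked = sorted(candidates, key=lambda item: abs(item[1] - item[2]))
    return [pair_id for pair_id, _, _ in ranked[:budget]]


# Illustrative RM scores for three candidate comparisons.
candidates = [
    ("pair-1", 2.0, -1.0),  # RM clearly prefers response A
    ("pair-2", 0.1, 0.0),   # nearly tied: most informative to label
    ("pair-3", 1.0, 0.5),
]
print(select_for_labeling(candidates, budget=2))
```

Under a Bradley-Terry style RM, a small score gap corresponds to a predicted preference probability close to 0.5, so this rule spends the labeling budget where a human judgment changes the model the most.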

3. Strategic Partnering with a Reward Model Labeling Vendor

For rapid scaling and consistent quality, many organizations rely on a specialist.

- Vendor Expertise: Partner with a vendor like aqusag.com that offers not just raw labeling capacity but also expertise in RLHF team structure, quality assurance, and custom reward model labeling for complex, sensitive domains.

- Scalability: A top vendor can rapidly scale up or down the RLHF workforce to meet iterative development needs without compromising the consistency of the feedback.

Frequently Asked Questions (FAQs)

What is the most expensive part of the RLHF process?

The most expensive part of RLHF is often the collection of high-quality human preference data and the subsequent management of the RLHF workforce. Human labeling is time-consuming and requires specialized, high-wage workers, especially for domain-specific models. Strategic partnering with a reward model labeling vendor like aqusag.com can optimize this cost.

What is the difference between SFT and RLHF?

Supervised Fine-Tuning (SFT) trains the LLM using direct examples of desired outputs (prompt/response pairs). RLHF is the subsequent stage that uses human ranking/comparison to train a Reward Model, which then guides the LLM to align with nuanced human preferences and values beyond simple correctness.

What is a Reward Model Labeling Vendor?

A Reward Model Labeling Vendor (like aqusag.com) provides the specialized RLHF workforce: the human annotators or raters who compare and rank LLM outputs. This preference data is then used to train the Reward Model. The vendor manages the quality, scale, and domain expertise of these evaluators.

How do I structure my RLHF team?

A basic RLHF team structure includes an RL Engineer, an ML Scientist for the Reward Model, a Prompt Architect, and a dedicated team of Human Evaluators. For rapid deployment, many companies leverage external RLHF workforce providers to handle the data collection and annotation at scale.

What specific roles make up the RLHF workforce?

The RLHF workforce primarily consists of highly-trained Annotators, Raters, or Human Evaluators. These roles require strong analytical skills, attention to detail, and often domain-specific knowledge to consistently apply complex alignment rubrics.

Why is inter-rater agreement important in RLHF?

Inter-rater agreement (IRA) measures how consistently different human evaluators apply the same labeling guidelines. High IRA is critical because it indicates that the human preference data is consistent, making the Reward Model more reliable and less susceptible to learning noise or individual biases.