Why scaling RLHF is hard

Large language model training is resource‑intensive because it combines massive GPU compute, large curated datasets, and complex post‑training steps like supervised fine‑tuning and RLHF on top of already expensive pretraining runs. Even after the base model is trained, teams must repeatedly generate responses, collect human preferences, update reward models, and run policy optimization, which quickly drives up costs and latency if the pipeline is not engineered well.

What makes LLM training resource‑intensive

LLM training consumes significant GPU memory and compute for generation, reward modeling, and gradient updates, and each stage has different parallelization and batch size requirements, which limits hardware utilization. In addition, high‑quality data curation, labeling, and evaluation require skilled human reviewers, who often become the bottleneck long before GPUs are fully saturated.

Why RLHF needs elite human reviewers

RLHF performance depends heavily on consistent, well‑calibrated human raters, not generic crowd workers: raters need to understand model behavior, safety policies, and subtle preference trade‑offs. Poorly trained or inconsistent reviewers introduce noisy labels that weaken the reward model and reduce alignment quality, so extra compute and bigger models can deliver diminishing returns.

How offshore RLHF teams reduce iteration time

Offshore RLHF teams in India can extend coverage across time zones, enabling near‑continuous data collection, labeling, and evaluation so that product teams in the US or Europe can see aligned model updates every sprint instead of every quarter. By drawing on lower operational costs and a deep pool of English‑proficient technical talent in India, companies can scale from a handful of in‑house reviewers to dozens of specialized LLM trainers without a proportional increase in budget.

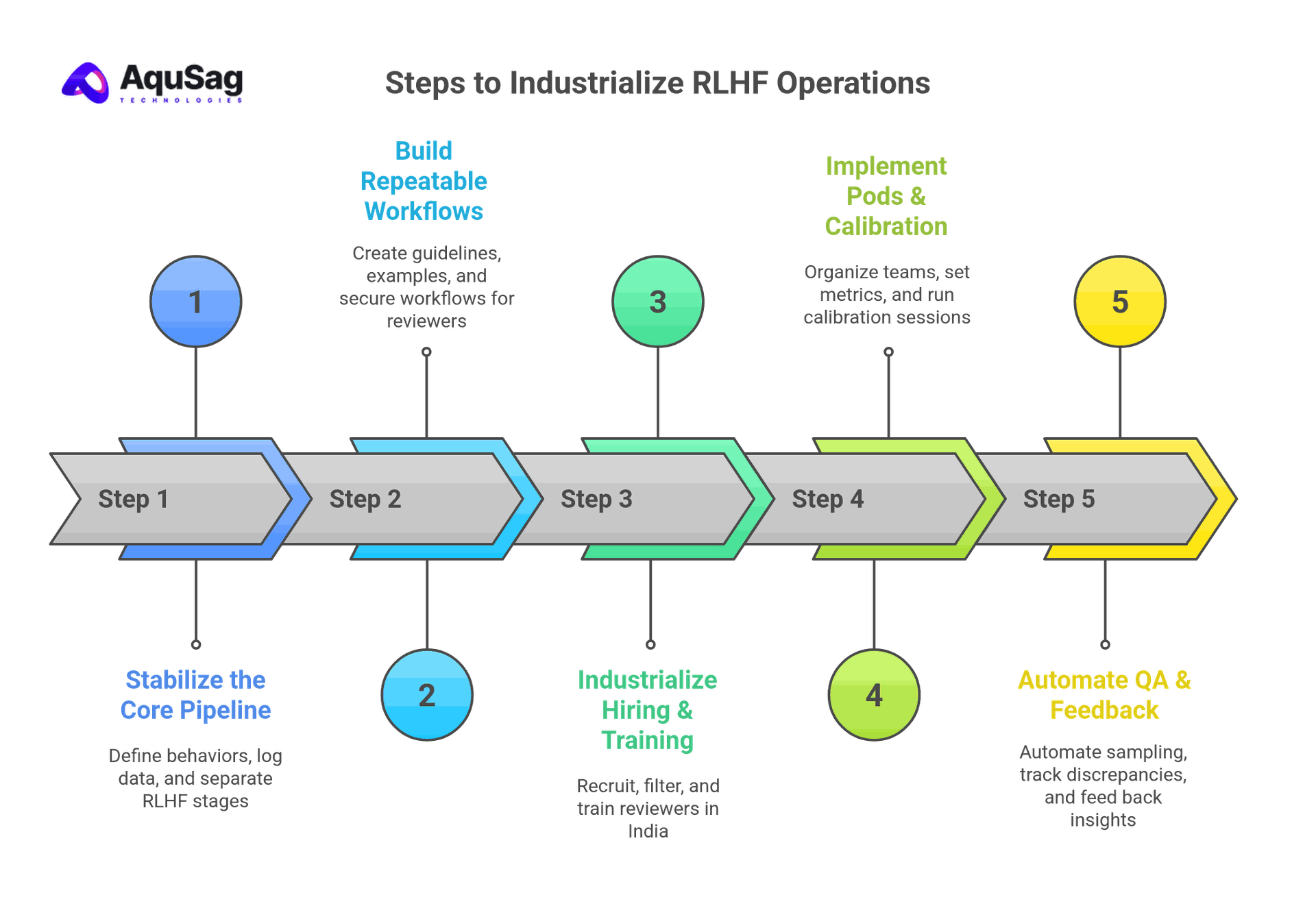

Framework to scale from 3 to 50 trainers

A practical way to scale an RLHF operation is to start with a core team of 3–5 senior reviewers who lock down guidelines, edge cases, and quality bars before expanding to a pod‑based structure with leads supervising 5–8 junior trainers each. Once processes and rubrics are stable, you can ramp to 30–50 trainers by standardizing onboarding, building calibration tests, and using QA sampling at each pod level so quality stays consistent as headcount grows.
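The pod arithmetic above can be sketched in a few lines of Python. This is a rough planning aid, not a staffing model: the `plan_pods` function and its field names are hypothetical, and it assumes one lead per pod and a maximum pod size inside the 5–8 range mentioned above.

```python
import math


def plan_pods(trainers: int, pod_size: int = 8) -> dict:
    """Estimate how many pods and leads a trainer headcount needs.

    Assumption: one lead per pod, and pod_size is the maximum number
    of junior trainers a lead supervises (5-8 in the text).
    """
    if trainers <= 0:
        raise ValueError("trainers must be positive")
    if not 5 <= pod_size <= 8:
        raise ValueError("pod_size should stay within the 5-8 range")
    pods = math.ceil(trainers / pod_size)
    return {
        "trainers": trainers,
        "pods": pods,
        "leads": pods,  # one lead per pod (assumption)
        "avg_pod_size": round(trainers / pods, 1),
    }


if __name__ == "__main__":
    for headcount in (30, 50):
        print(plan_pods(headcount))
```

Under these assumptions, 50 trainers with pods capped at 8 need 7 pods and therefore 7 leads, which is worth budgeting for before the ramp starts.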

Step 1: Stabilize the core RLHF pipeline

Begin by defining your target behaviors, safety constraints, and evaluation criteria, then instrument your system so prompts, model outputs, and decisions are logged and traceable through the RLHF loop. Ensure clear separation between data collection, reward modeling, and policy optimization so each stage can be measured and improved independently as the team scales.

Step 2: Build repeatable reviewer workflows

Create detailed labeling guidelines, golden examples, and decision trees so multiple reviewers can make consistent judgments on toxicity, helpfulness, hallucinations, and policy violations. Implement structured workflows, such as ranking multiple model responses, tagging failure modes, and giving structured rationales, that can be executed in tools your vendors and offshore teams can access securely.
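To make the ranking workflow concrete, here is a minimal sketch of what one structured ranking record might look like and how it could be expanded into pairwise preferences for reward model training. The `RankingTask` fields and the `to_preference_pairs` helper are illustrative assumptions, not a schema from any particular labeling tool.

```python
from dataclasses import dataclass, field
from itertools import combinations
from typing import Dict, List, Tuple


@dataclass
class RankingTask:
    """One reviewer's judgment on one prompt (hypothetical schema)."""

    prompt_id: str
    reviewer_id: str
    ranking: List[str]  # response ids, best first
    # response id -> failure-mode tags, e.g. {"C": ["hallucination"]}
    failure_tags: Dict[str, List[str]] = field(default_factory=dict)
    rationale: str = ""


def to_preference_pairs(task: RankingTask) -> List[Tuple[str, str]]:
    """Expand a best-first ranking into (chosen, rejected) pairs."""
    # combinations() preserves input order, so the first element of
    # each pair is always the higher-ranked response.
    return list(combinations(task.ranking, 2))


if __name__ == "__main__":
    task = RankingTask(
        prompt_id="p1",
        reviewer_id="r1",
        ranking=["A", "B", "C"],
        failure_tags={"C": ["hallucination"]},
        rationale="A is complete and cites sources; C invents a figure.",
    )
    print(to_preference_pairs(task))
```

Keeping tags and rationales next to the ranking means the same record can feed both reward model training and later QA review.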

Step 3: Industrialize hiring and training in India

Use a vendor or local partner in India experienced in RLHF and data labeling to recruit English‑fluent reviewers with STEM or domain backgrounds and filter them using scenario‑based tests that mirror your production prompts. Run a structured training program with sandbox tasks, shadowing, and calibration quizzes so new LLM trainers can reach production‑ready quality in weeks instead of months.

Step 4: Implement pods, leads, and calibration

Organize teams into pods where one experienced lead manages 5–8 RLHF trainers, reviews edge cases, and runs weekly calibration sessions using new, tricky prompts. Set quantitative thresholds for agreement rates and error rates at both individual and pod level, and use these metrics to decide when to add more headcount or pause scaling to fix guidelines.
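One common way to put a number on agreement between two reviewers is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a plain‑Python version for two raters labeling the same tasks. The function name and the example labels are assumptions for illustration, and any threshold you set on the result is a policy choice for your own team.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cohen's kappa for two raters who labeled the same tasks in order."""
    if len(labels_a) != len(labels_b) or len(labels_a) == 0:
        raise ValueError("both raters must label the same non-empty task list")
    n = len(labels_a)
    # Observed agreement: share of tasks where both raters chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each rater's label frequencies, summed over labels.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    lead = ["safe", "safe", "unsafe", "unsafe"]
    trainer = ["safe", "safe", "unsafe", "safe"]
    print(cohens_kappa(lead, trainer))
```

In this example the raters agree on 3 of 4 tasks (75%), chance agreement is 50%, and kappa comes out at 0.5, which shows why raw agreement alone can look better than it is.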

Step 5: Automate QA and feedback loops

Introduce automatic sampling of completed tasks for secondary review, and track discrepancies to identify reviewers or pods that are drifting from the expected standard. Feed aggregated QA findings back into guidelines, training content, and reward model design so every iteration improves both human and model performance.

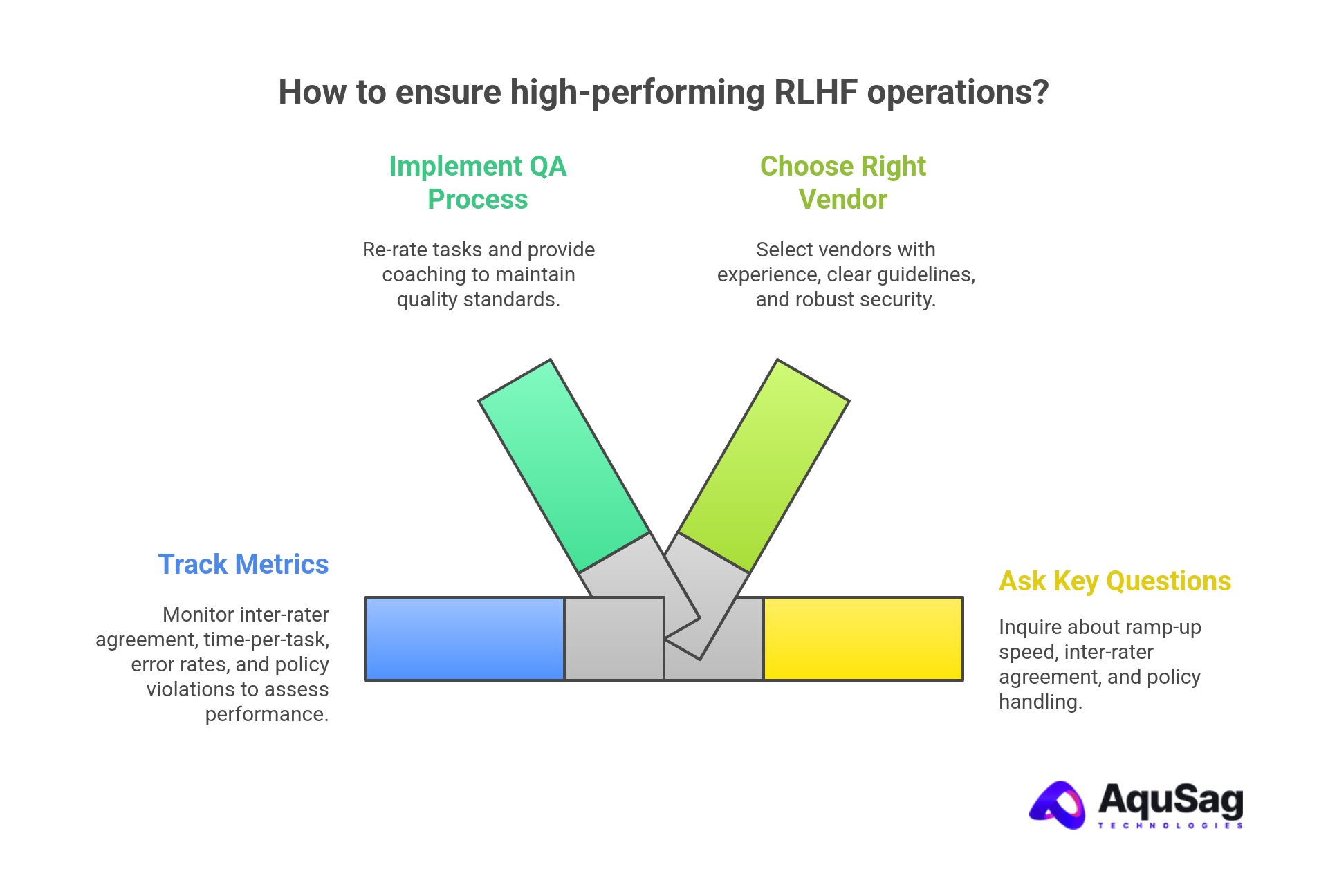

Example output metrics and QA process

High‑performing RLHF operations track metrics like inter‑rater agreement, time‑per‑task, error rates on golden datasets, and the number of policy violations caught before deployment. On the model side, teams monitor alignment metrics such as harmful output rates, helpfulness scores, and win‑rates against baseline models in A/B tests to decide when a new RLHF run is safe to ship.
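As a small illustration of the win‑rate metric, the sketch below scores a list of head‑to‑head judgments between a candidate model and a baseline. Counting a tie as half a win is one common convention and is an assumption here, as are the function name and the outcome labels.

```python
from collections import Counter
from typing import Iterable


def win_rate(outcomes: Iterable[str]) -> float:
    """Win-rate of a candidate model against a baseline.

    Each outcome is "win", "loss", or "tie" from the candidate's
    point of view. Ties count as half a win (assumed convention).
    """
    counts = Counter(outcomes)
    unknown = set(counts) - {"win", "loss", "tie"}
    if unknown:
        raise ValueError(f"unexpected outcome labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no outcomes to score")
    return (counts["win"] + 0.5 * counts["tie"]) / total


if __name__ == "__main__":
    judgments = ["win", "win", "loss", "tie"]
    print(win_rate(judgments))
```

On small samples the figure is noisy, so it is usually worth reporting the number of comparisons alongside it.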

Sample QA workflow for RLHF teams

A common QA process is to have 10–20% of completed tasks re‑rated by a senior reviewer or a small expert panel, comparing decisions against golden labels or consensus to detect drift. Reviewers whose decisions fall below thresholds can receive targeted coaching, temporary restrictions to lower‑impact tasks, or re‑certification exercises to bring them back to standard.
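The QA loop above can be sketched as two small functions: one that samples a share of completed tasks for senior re‑rating, and one that flags reviewers whose agreement with the senior label falls below a threshold. The function names, the 15% default rate, and the 0.9 agreement threshold are illustrative assumptions. In practice you would also want a minimum number of audited tasks per reviewer before acting on the result.

```python
import random
from collections import Counter
from typing import Iterable, List, Optional, Sequence, Tuple


def sample_for_review(
    task_ids: Sequence[str], rate: float = 0.15, seed: Optional[int] = None
) -> List[str]:
    """Pick a random share of completed tasks for senior re-rating."""
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    if not task_ids:
        return []
    rng = random.Random(seed)  # seeded so an audit sample can be reproduced
    k = max(1, round(len(task_ids) * rate))
    return rng.sample(list(task_ids), k)


def flag_reviewers(
    audits: Iterable[Tuple[str, str, str]], min_agreement: float = 0.9
) -> List[str]:
    """Return reviewers whose labels match the senior label too rarely.

    Each audit is (reviewer_id, original_label, senior_label).
    """
    totals, matches = Counter(), Counter()
    for reviewer, original, senior in audits:
        totals[reviewer] += 1
        matches[reviewer] += int(original == senior)
    return sorted(r for r in totals if matches[r] / totals[r] < min_agreement)


if __name__ == "__main__":
    picked = sample_for_review([f"t{i}" for i in range(100)], rate=0.15, seed=0)
    print(len(picked))
    audits = [("a", "x", "x"), ("a", "y", "y"), ("b", "x", "y"), ("b", "y", "y")]
    print(flag_reviewers(audits))
```

Flagged reviewers would then enter the coaching or re‑certification path described above rather than being removed automatically.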

Choosing the right RLHF vendor

When selecting an RLHF vendor or offshore LLM training partner, prioritize those who can show proven experience with safety‑sensitive domains, clear guidelines, and documented calibration processes rather than just headcount. Look for transparent quality metrics, the ability to ramp from small pilots to 50+ trainers, robust data security practices, and willingness to adapt workflows to your internal tooling and research roadmap.

Key vendor evaluation questions

Useful questions include how quickly the vendor can ramp from 3 to 30 RLHF trainers, what their typical inter‑rater agreement looks like on complex prompts, and how they handle policy updates at scale. It is also important to ask about their experience working with US and European AI labs, their reviewer background mix, and whether they support 24/7 coverage across time zones.

FAQs on scaling RLHF and LLM training

What is RLHF in LLMs?

Reinforcement learning from human feedback (RLHF) is a post‑training technique in which human raters compare model outputs, a reward model is trained on those preferences, and a reinforcement learning algorithm then optimizes the language model against that reward model. It is widely used to make LLMs safer, more helpful, and better aligned with human expectations than supervised fine‑tuning alone.
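For readers who want to see the reward model step in concrete terms, one common formulation is a pairwise (Bradley‑Terry style) loss: the reward model is penalized when it scores the rejected response close to or above the response the rater preferred. The sketch below computes that loss for a single pair in plain Python. The function name is hypothetical, and real training would apply the same formula in a deep learning framework over batches.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)) for one comparison."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); branch on the sign of m
    # so that exp() never receives a large positive argument.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    # The loss shrinks as the chosen response is scored further above the rejected one.
    for chosen, rejected in [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)]:
        print(chosen, rejected, pairwise_preference_loss(chosen, rejected))
```

When both responses receive the same reward, the loss is log 2. Noisy or inconsistent human labels push the model toward that uninformative point, which is one reason reviewer quality matters so much.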

Why should RLHF teams be based in India?

India offers a large pool of English‑proficient, technically trained professionals who can be upskilled into specialized RLHF roles at a lower cost than many Western markets, enabling larger reviewer teams within the same budget. In addition, Indian RLHF vendors are increasingly experienced with global AI labs, security standards, and complex domain requirements, making them strong partners for scaling human‑in‑the‑loop pipelines.

How many RLHF reviewers do I need?

The number of reviewers depends on model size, product scope, and iteration speed, but many teams start with a small core group and then expand to 20–50 trainers as they move from research to production deployment. As the operation grows, investing in better processes, tooling, and QA usually yields more benefit than simply adding more reviewers without structure.

How do I keep RLHF quality high at scale?

Maintaining quality at scale requires clear guidelines, golden datasets, regular calibration sessions, and quantitative QA metrics like agreement rates and error rates. Combining these with structured pods, strong leads, and periodic retraining of reviewers helps keep both human and model behavior aligned as the team grows.

How fast can an offshore RLHF team ramp up?

Specialized RLHF vendors in India can often ramp from a pilot team to dozens of trained reviewers in a matter of weeks, especially when they maintain pre‑vetted talent pools and standardized onboarding programs. The exact ramp‑up time depends on domain complexity, security reviews, and how quickly your internal team can finalize guidelines and tooling access.

What should I look for in an RLHF vendor?

Look for vendors who provide measurable quality metrics, transparent processes, secure infrastructure, and experience with LLM alignment projects similar to yours rather than generic annotation work. A strong RLHF vendor should be able to support experiments, rapid iteration, and long‑term production alignment without slowing down your product delivery roadmap.