Enterprises around the world are racing to adopt large language models and generative AI not as experimental tools, but as integral components of their product and operational strategies. These organizations are under pressure to launch new AI-powered features, build agentic systems, process massive amounts of unstructured data, personalize customer experiences, and respond to the changing expectations of modern users.

But the reality behind the scenes is far more complex. RLHF workflows, LLM evaluation cycles, model fine-tuning, instruction generation, domain-specific data preparation, and AI application engineering all demand specialized expertise that most enterprises simply do not have in-house. Even well-established organizations with strong engineering teams struggle to assemble skilled contributors fast enough to support AI delivery at the speed the business requires.

This mismatch between business urgency and internal capability raises a fundamental question that nearly every company implementing AI eventually confronts: How do we build end-to-end LLM, RLHF, and GenAI delivery pipelines without needing to hire and manage large specialized internal teams?

This article explores the answer in depth, showing how a modern enterprise can design, launch, and scale sophisticated AI pipelines by partnering with the right external workforce and operational structures, without expanding its permanent headcount.

Why is building internal AI delivery capability so difficult for enterprises?

Enterprises are not failing because they lack resources. They are failing because AI delivery requires a very different set of operational rhythms and staffing dynamics than traditional software initiatives.

An LLM is not a static system. It requires continuous evaluation, retraining, and refinement. RLHF workflows depend on contributors who understand instructions, context, safety rules, hallucination detection, scoring frameworks, and domain-specific nuance. Data annotation pipelines must be consistent, structured, and repeatable at large scale. Engineering support for GenAI applications requires Python developers familiar with embeddings, vector stores, prompting techniques, and agentic orchestration.

None of this fits neatly inside traditional hiring structures. Even companies with large engineering teams do not maintain dozens or hundreds of LLM Trainers, RLHF Annotators, Evaluation Specialists, and domain-trained Data Annotators on permanent payroll. They are not designed to scale Python developers for short-term AI workloads, nor can they shift engineers weekly based on model lifecycle demands.

The problem is not talent scarcity alone; it is structural incompatibility. Enterprises are built for stability, not volatility. AI workloads, on the other hand, are inherently volatile. They spike and drop constantly as models evolve.

This is why enterprises increasingly seek a delivery model that externalizes the complexity while maintaining full control over quality and outcomes.

What makes RLHF and LLM delivery workflows uniquely resource-intensive?

RLHF (Reinforcement Learning from Human Feedback) is not a single activity. It is a multi-layered pipeline that includes instruction generation, preference ranking, response evaluation, safety review, and continuous scoring. Each component requires trained contributors who can understand model behavior, identify hallucinations, apply style constraints, and follow intricate rubrics.
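
To make the preference-ranking step concrete, here is a minimal sketch of how one contributor's ranking of several model responses can be expanded into pairwise (chosen, rejected) records, a common input shape for reward-model training. The `PreferencePair` type and `ranking_to_pairs` function are hypothetical names introduced for illustration, not part of any specific tool.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class PreferencePair:
    """One human preference: `chosen` was ranked above `rejected`."""
    prompt: str
    chosen: str
    rejected: str


def ranking_to_pairs(prompt, ranked_responses):
    """Expand a best-to-worst ranking into every pairwise preference.

    `combinations` preserves input order, so the first element of each
    pair is always the higher-ranked response.
    """
    return [
        PreferencePair(prompt, better, worse)
        for better, worse in combinations(ranked_responses, 2)
    ]


pairs = ranking_to_pairs("Summarize the policy.", ["best", "middle", "worst"])
```

A ranking of K responses yields K(K-1)/2 pairs, which is one reason a modest number of ranked items can still produce a large volume of review work.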

Similarly, LLM fine-tuning pipelines require massive amounts of high-quality, domain-aligned training data, created through consistent instructional patterns. LLM evaluation workflows involve structured scoring, comparative analysis, rubric-driven judgment, and error classification. GenAI engineering work requires supporting Python developers who build retrieval flows, agent functions, orchestration layers, and testing frameworks.

An enterprise cannot expect internal engineers, even highly skilled ones, to suddenly possess expertise in RLHF, annotation, prompt evaluation, or GenAI application scaffolding. These tasks require different mindsets, different training, and different operational systems.

Without specialized teams, RLHF and LLM pipelines fail for predictable reasons: lack of consistency, slow turnaround, incomplete coverage of workflows, inadequate evaluation quality, and inability to scale to meet model iteration demands.

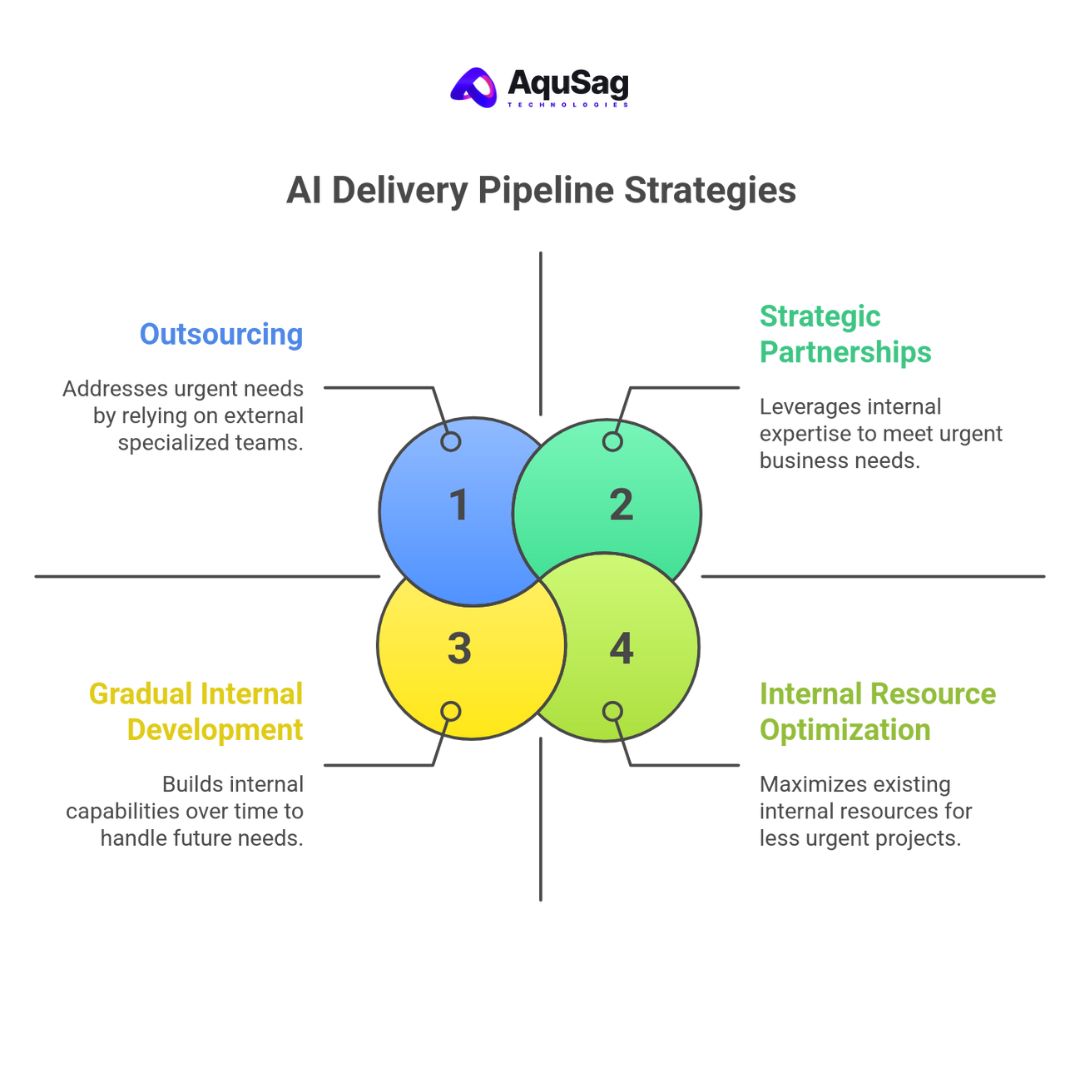

Why do enterprises ultimately choose external AI workforce augmentation instead of internal hiring?

The answer lies in three unavoidable realities: speed, specialization, and elasticity.

Speed

LLM and RLHF projects rarely operate on long timelines. Enterprises transitioning into AI often need to begin production work immediately to meet strategic deadlines, competitive pressures, or internal product expectations. Internal hiring cannot match the pace required to operationalize these pipelines within days or weeks.

Specialization

Even companies with large engineering teams rarely have contributors trained to produce RLHF data, classify model behavior, or run evaluation frameworks. They also lack ready-to-deploy Data Annotators with domain knowledge or Python developers trained specifically for AI workflows. The breadth of required skillsets is too large for any internal team to build quickly.

Elasticity

AI workflows contract and expand continuously. During evaluation cycles, a team may need 100 contributors. During refinement cycles, they may need 40. When agentic workflows are being tested, Python engineers may be needed. A static internal team cannot scale in sync with such fluctuations.

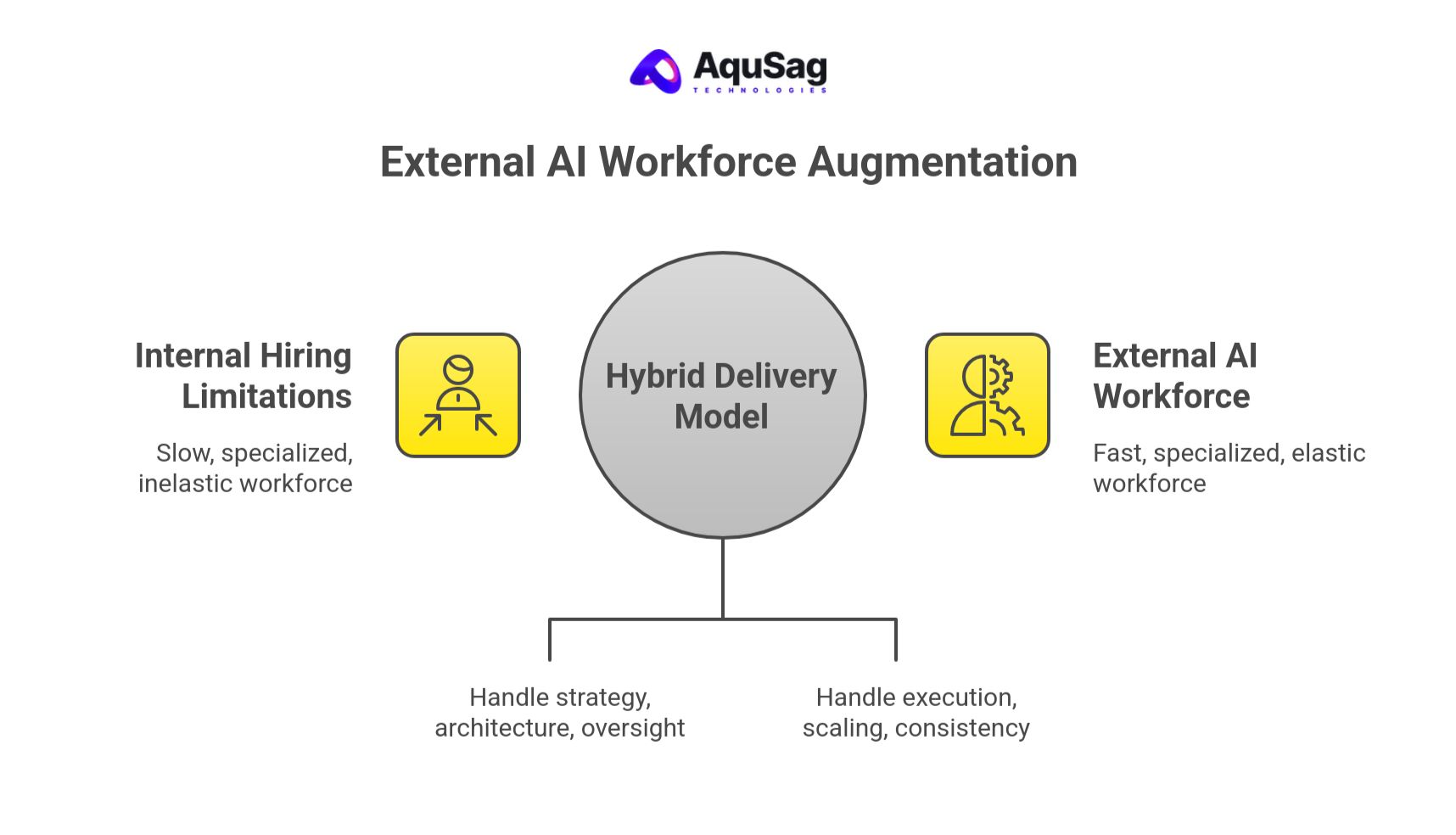

For these reasons, enterprises increasingly adopt a hybrid delivery model: internal teams handle strategy, architecture, and oversight, while external teams handle execution, scaling, and operational consistency.

How can enterprises run RLHF pipelines without having internal RLHF experts?

The key is to partner with a workforce provider that already trains and maintains RLHF-ready contributors. These contributors understand instruction-following frameworks, preference ranking systems, safety guidelines, bias detection, and evaluation rules. They work in controlled workflows with job aids, reference examples, and governance systems that maintain consistency across the entire pipeline.

An enterprise only needs to define the model goals, evaluation rubrics, and expected outputs. The external workforce partner then handles the operational side: training contributors, managing quality, coordinating reviews, and ensuring that the model’s reinforcement loop is populated with high-quality human feedback.

This allows enterprises to launch and maintain RLHF programs without needing internal RLHF specialists.

Can enterprises operate full LLM evaluation programs without hiring evaluators?

Yes, and this is where specialized external teams deliver maximum value.

Well-run evaluation pipelines require contributors who can score model responses across categories such as accuracy, reasoning, coherence, style alignment, format compliance, domain correctness, risk level, safety, hallucination presence, and overall approval. The evaluators must remain consistent across large volumes of evaluations, even as rubrics evolve weekly.
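
As an illustration of what rubric-driven scoring looks like in practice, the sketch below validates per-response scores and averages them by category. The category subset, the 1 to 5 scale, and the function names are assumptions made for this example; a real rubric would be defined by the enterprise.

```python
from statistics import mean

# Hypothetical subset of the rubric categories named above.
RUBRIC_CATEGORIES = (
    "accuracy", "reasoning", "coherence", "format_compliance", "safety",
)


def validate_scores(scores, low=1, high=5):
    """Raise ValueError unless every category has a score in [low, high]."""
    missing = [c for c in RUBRIC_CATEGORIES if c not in scores]
    if missing:
        raise ValueError(f"missing categories: {missing}")
    for category in RUBRIC_CATEGORIES:
        if not low <= scores[category] <= high:
            raise ValueError(
                f"{category} score out of range: {scores[category]}"
            )


def category_means(evaluations):
    """Average each rubric category across a batch of evaluations."""
    for scores in evaluations:
        validate_scores(scores)
    return {c: mean(s[c] for s in evaluations) for c in RUBRIC_CATEGORIES}


batch = [
    {"accuracy": 4, "reasoning": 3, "coherence": 5,
     "format_compliance": 5, "safety": 5},
    {"accuracy": 2, "reasoning": 3, "coherence": 4,
     "format_compliance": 5, "safety": 5},
]
summary = category_means(batch)
```

Rejecting incomplete or out-of-range records at intake is a simple way to keep evaluation data comparable when the rubric changes from week to week.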

Maintaining such consistency internally is extremely difficult. External evaluation teams, however, are trained specifically for this. They follow structured interpretation guidelines, calibration sessions, review boards, and quality checks that ensure the evaluation data is accurate, reliable, and reproducible.
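
One way a calibration session can quantify consistency is an agreement statistic such as Cohen's kappa, which measures how often two evaluators agree beyond what chance alone would produce. The sketch below is a plain-Python version for two evaluators labeling the same items; the article does not say which metric any given partner uses, so treat the choice as an assumption.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two evaluators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both evaluators must label the same non-empty set")
    n = len(labels_a)
    # Fraction of items on which the two evaluators gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each evaluator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[l] * counts_b[l] for l in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both evaluators used a single identical label throughout
    return (observed - expected) / (1 - expected)


kappa = cohens_kappa(
    ["ok", "ok", "bad", "bad"],
    ["ok", "bad", "bad", "bad"],
)
```

Here the evaluators agree on three of four items (0.75 observed) against 0.5 expected by chance, giving a kappa of 0.5. A value of 1.0 means perfect agreement and 0 means no better than chance.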

With this model, enterprises receive complete evaluation outputs without hiring a single internal evaluator.

How can enterprises perform large-scale annotation without building annotation teams?

Data annotation is often the hidden backbone of LLM and GenAI workflows. While enterprises initially underestimate its importance, they eventually discover that annotation determines data quality, which directly determines model performance.

Annotation roles vary widely: structured data labeling, unstructured content interpretation, safety annotation, domain classification, customer intent tagging, document extraction, metadata enrichment, and proprietary taxonomy application.

Maintaining a multidisciplinary internal annotation team is unrealistic. Enterprises instead rely on external teams that maintain continuous annotation pipelines. These contributors are trained on guidelines, calibrated using reference sets, and monitored for accuracy and output consistency.
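
Calibration against a reference set can be as simple as comparing each contributor's labels with known-good answers. The following is a minimal sketch under stated assumptions: the function names, the dictionary layout, and the 0.9 accuracy threshold are all hypothetical and chosen only for illustration.

```python
def accuracy_against_reference(submitted, reference):
    """Share of reference items the contributor labeled correctly.

    Both arguments map item IDs to labels; only items present in both
    are scored.
    """
    shared = [item for item in reference if item in submitted]
    if not shared:
        raise ValueError("contributor labeled no reference items")
    correct = sum(submitted[item] == reference[item] for item in shared)
    return correct / len(shared)


def flag_for_coaching(labels_by_contributor, reference, threshold=0.9):
    """Return contributors whose reference-set accuracy is below threshold."""
    return sorted(
        name
        for name, labels in labels_by_contributor.items()
        if accuracy_against_reference(labels, reference) < threshold
    )


reference = {"doc1": "invoice", "doc2": "contract", "doc3": "invoice"}
submissions = {
    "contributor_a": {"doc1": "invoice", "doc2": "contract", "doc3": "invoice"},
    "contributor_b": {"doc1": "invoice", "doc2": "invoice", "doc3": "invoice"},
}
needs_coaching = flag_for_coaching(submissions, reference)
```

In this example `contributor_b` scores two of three and falls below the threshold, so only that name is flagged.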

Enterprises maintain direction and control, but not the operational workload.

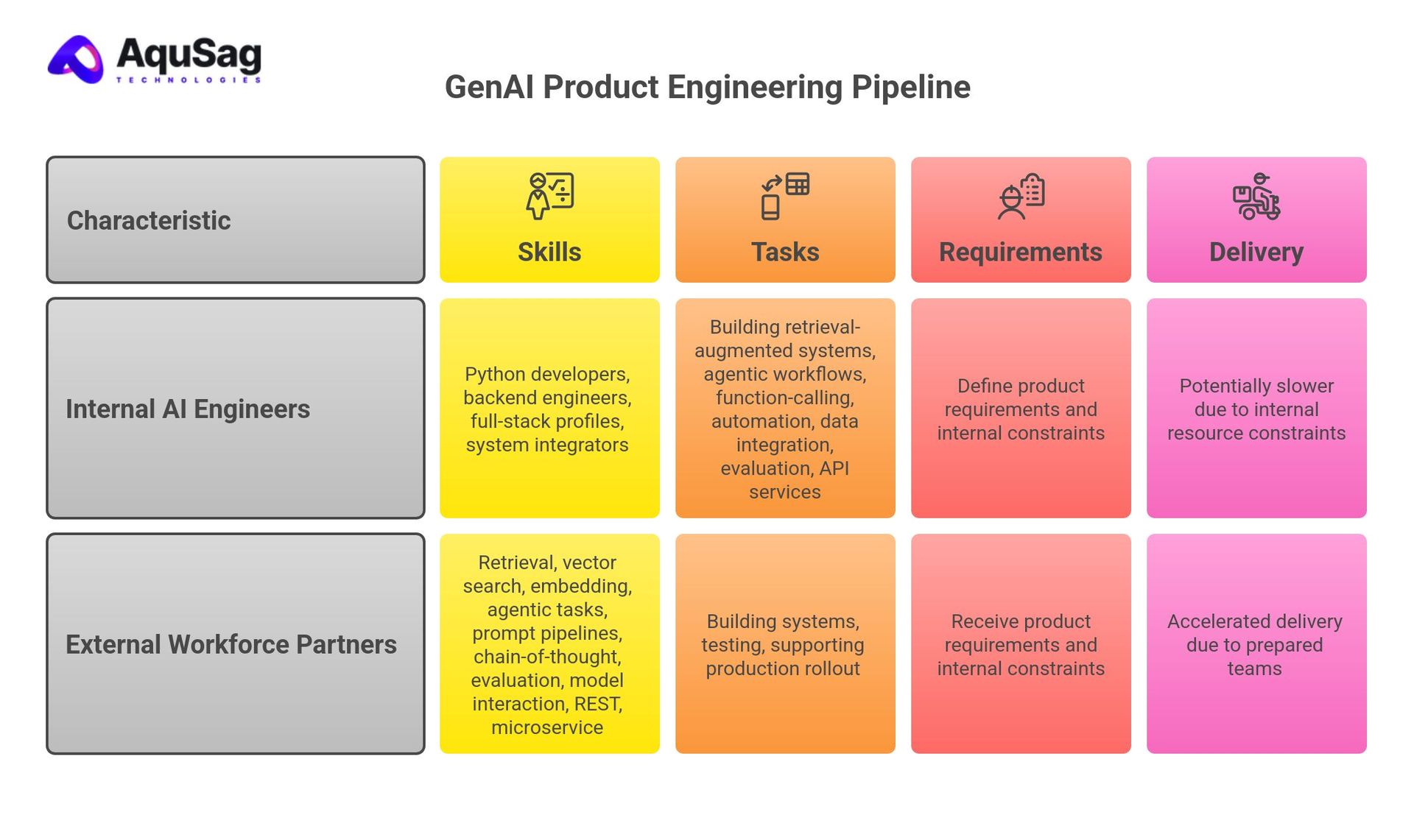

How do enterprises build GenAI product engineering pipelines without internal AI engineers?

Enterprises often need engineering support for building GenAI-powered applications. This includes retrieval-augmented systems, agentic workflows, function-calling capabilities, automation scripts, data integration layers, evaluation dashboards, and API services. These tasks require Python developers, backend engineers, full-stack profiles, and system integrators familiar with modern AI development.

External workforce partners provide developers with experience in:

- Retrieval and vector search

- Embedding orchestration

- Multi-step agentic tasks

- Prompt pipelines

- Chain-of-thought constraints

- Evaluation tooling

- Model interaction workflows

- REST and microservice integration
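
To give a sense of the first item in the list above, here is a toy sketch of vector search: documents are ranked by cosine similarity between a query embedding and stored embeddings. The two-dimensional vectors and document IDs are invented for illustration; production systems would use a real embedding model and a dedicated vector store.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, index, k=2):
    """Return the IDs of the k stored vectors most similar to the query."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]


# Hypothetical in-memory index mapping document IDs to embeddings.
index = {
    "refund_policy": [1.0, 0.0],
    "shipping_faq": [0.0, 1.0],
    "returns_guide": [1.0, 1.0],
}
results = top_k([1.0, 0.0], index, k=2)
```

The retrieved documents would then be placed into the model's prompt, which is the core of a retrieval-augmented flow.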

Enterprises simply define the product requirements and internal constraints; the external engineering team builds the systems, tests them, and supports production rollout.

This accelerates delivery dramatically since teams join fully prepared.

How can enterprises maintain quality across outsourced RLHF, LLM, and annotation pipelines?

Quality is the single most important factor in AI workflows. Unlike traditional software QA, AI quality depends on structured human input and consistent interpretation of guidelines.

A strong external partner maintains quality through:

- Domain-specific training

- Calibration sessions

- Multi-layer review

- Continuous scoring

- Correction loops

- Contributor coaching

- Replacement pipelines

- Automated monitoring tools

Enterprises define the rubrics but do not handle the enforcement. The partner ensures that every contributor follows the rules consistently. This separation of responsibilities keeps the enterprise focused on outcomes rather than micromanaging the operational process.

What internal preparation is needed before an enterprise begins outsourcing AI workflows?

Surprisingly little. Enterprises mainly need clarity on the expected outputs, the structure of evaluation rubrics, data specifications, safety constraints, and the overall goals of the AI project. Once these components are defined, external teams can begin their work.

Internal preparation also includes establishing communication channels, creating escalation paths, and identifying internal owners for different parts of the delivery pipeline. The partner coordinates the rest: recruitment, training, scaling, quality, reporting, and technical alignment.

The enterprise retains strategic control and oversight, while the operational execution shifts externally.

How do enterprises ensure continuity in outsourced AI workflows?

Long-term AI programs require continuity across months or years. A mature partner maintains ready-to-deploy contributors and ensures that transitions are seamless. If contributors are replaced due to performance or availability, the partner retrains new contributors without slowing down delivery.

Enterprises benefit from predictable workflows, consistent output quality, and stable delivery cycles even during periods of change.

Can enterprises run entire GenAI initiatives without building internal AI operations teams?

Increasingly, the answer is yes. Many enterprises adopt a hybrid model where they maintain a small internal strategy team and rely on external teams for execution. This structure is efficient because internal experts focus on architecture, alignment with company objectives, compliance, and product vision, while external teams handle the dynamic workloads: training, evaluation, annotation, and engineering.

This model is now used widely in enterprises that want to move fast without committing to large internal AI departments.

Why external AI workforce models are defining the future of enterprise GenAI delivery

Enterprises want the benefits of LLMs, RLHF, and GenAI, but they do not want the operational burden of building large internal teams. Modern AI workloads require scale, specialization, and speed, none of which fit the structure of traditional hiring. External workforce augmentation solves this by providing trained contributors, rapid deployment, flexible scaling, quality governance, and multidisciplinary support that covers the full AI lifecycle.

This model allows enterprises to adopt AI confidently, experiment freely, and deliver reliably without expanding internal headcount. It removes operational friction, enforces quality, and creates a delivery engine capable of keeping pace with the rapidly evolving AI landscape.

Enterprises that adopt this model now gain a structural advantage. They achieve faster time-to-market, greater cost efficiency, and deeper operational resilience, all without creating complex internal teams.

This is not a temporary workaround; it is becoming the default way enterprises build and scale modern AI systems.

FAQ: Running RLHF, LLM, and GenAI Pipelines Without Internal Teams

1. Can enterprises run RLHF workflows without hiring internal experts?

Yes. A trained external workforce can execute instruction generation, preference ranking, safety review, and reinforcement loops based on the enterprise’s guidelines.

2. What roles are typically outsourced in GenAI delivery?

LLM Trainers, RLHF Annotators, Evaluation Specialists, Data Annotators, Python Developers for AI Services, Backend Engineers, Agentic Workflow Contributors, and Model Validation Specialists.

3. How fast can external AI teams be deployed?

Most workloads can be supported within days, and large cohorts can be activated within a week.

4. Does outsourcing compromise model quality?

Not when the partner enforces structured QA. Calibrated external processes often produce more consistent and reliable outputs than ad hoc internal teams.

5. Is this model suitable for long-term enterprise AI programs?

Yes. Many enterprises rely on external AI workforce partners for ongoing evaluation, continuous annotation, and long-running RLHF cycles.