In the high-stakes race to achieve Artificial General Intelligence (AGI), the industry has become obsessed with three variables: compute power, data volume, and architectural efficiency. Silicon Valley spends billions on H100 clusters and petabytes of raw data, yet many of these same organizations overlook the most volatile variable in the entire equation: the human being in the loop.

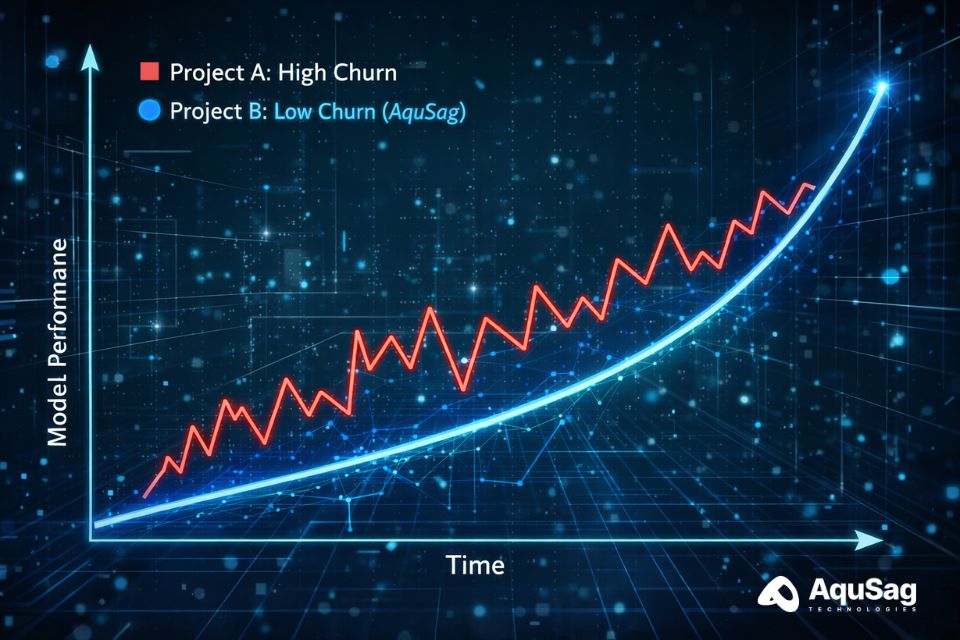

As AI models move from simple pattern recognition to complex reasoning and logic, the quality of human feedback has become the primary differentiator. However, there is a silent killer lurking in the balance sheets of major AI labs and enterprises. It is not a technical bug or a hardware failure. It is talent churn.

When a specialized trainer, such as a legal expert, a medical doctor, or a senior software engineer, leaves a project mid-cycle, they do not just leave a vacancy. They take a piece of the model's potential intelligence with them. In this deep dive, we will explore why stability is the only true moat in the AI training landscape and how the industry must evolve from "gig-based" labeling to "Stability as a Service."

The Mathematics of Model Inconsistency

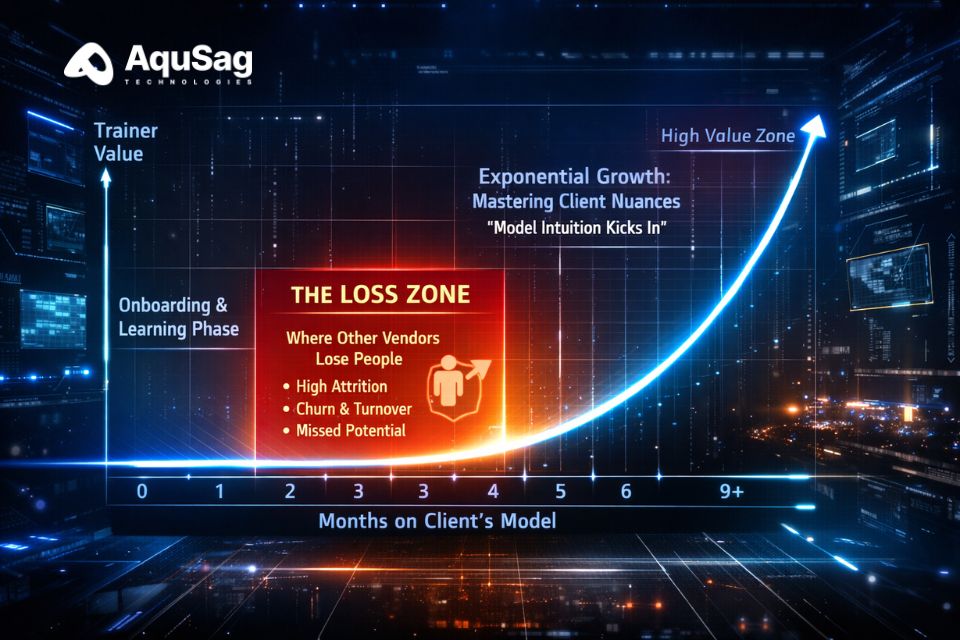

To understand the cost of churn, one must first understand the mechanics of Reinforcement Learning from Human Feedback (RLHF). Unlike traditional data labeling, where a worker might identify a "stop sign" in a photo, modern RLHF requires the trainer to evaluate nuance, tone, logical fallacies, and ethical boundaries.

When a new trainer enters a project, there is a "calibration period." Even with the most robust Standard Operating Procedures (SOPs), every human interprets "helpfulness" and "harmlessness" slightly differently. If your team is a revolving door of contractors, your model is essentially being taught by a crowd of strangers who have never met one another. This drives up inter-annotator disagreement, a technical hurdle that slows convergence and forces expensive re-runs of training sets.
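Inter-annotator disagreement can be measured rather than just described. One common statistic is Cohen's kappa, which compares how often two annotators actually agree with how often they would agree by chance. The sketch below is a minimal, standard-library implementation for two annotators labeling the same items; the pairwise preference labels ("A" or "B", meaning which of two responses is better) and the veteran/newcomer scenario are hypothetical, chosen only to show how a calibrated pair and an uncalibrated pair can diverge.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: probability both pick the same label if each labels
    # independently at their own overall label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_chance = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical preference labels on the same 8 response pairs.
veteran_1 = ["A", "A", "B", "A", "B", "B", "A", "A"]
veteran_2 = ["A", "A", "B", "A", "B", "B", "A", "B"]
newcomer = ["B", "A", "A", "A", "B", "A", "B", "A"]

print(round(cohens_kappa(veteran_1, veteran_2), 3))  # 0.75
print(round(cohens_kappa(veteran_1, newcomer), 3))   # -0.067
```

A kappa near 1 indicates agreement well beyond chance; a value near 0 (or below) means the two annotators agree no more often than chance would predict. For production use, scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same statistic.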

The Retraining Tax: A Hidden Drain on Capital

Most AI labs calculate the cost of a vendor based on a simple hourly rate. This is a fundamental error. The true cost of a vendor is the Effective Hourly Rate, which includes the time spent by your internal lead engineers to interview, onboard, and retrain the vendor’s staff.

When a vendor has a churn rate of 30% to 40% (common in traditional business process outsourcing (BPO) and marketplace models), your senior engineers are trapped in a perpetual cycle of "Knowledge Transfer." Every time a specialized subject-matter expert (SME) leaves, your internal team must spend 10 to 20 hours bringing a replacement up to speed on the specific "style guide" and edge cases of your model.

If your lead engineers are earning $300,000 a year, their time is your most precious resource. Using them to repeatedly train a vendor's revolving door of contractors is a catastrophic waste of human capital. Stability in your vendor's workforce is, in effect, a refund of your engineering team's time.
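The Effective Hourly Rate can be sketched with a few lines of arithmetic. This version uses the figures from the text ($300,000 lead salary, 10 to 20 retraining hours per departure, 30% to 40% churn) plus clearly labeled assumptions: churn is treated as annual, a working year is 2,080 hours, the lead's time is priced at salary alone (no benefits or overhead), and the 20-person vendor team and $60/h vendor rate are hypothetical. It omits the replacement's own calibration period and any re-runs of training sets, so it should be read as a lower bound on the retraining tax, not a full cost model.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 2080  # assumption: 40 hours/week x 52 weeks


@dataclass
class RetrainingTax:
    engineer_hours: float   # internal lead hours spent retraining per year
    cost: float             # those hours priced at the lead's salary
    per_billed_hour: float  # cost spread over every hour the vendor team bills


def retraining_tax(team_size: int, annual_churn: float, hours_per_departure: float,
                   lead_salary: float, hours_per_year: int = HOURS_PER_YEAR) -> RetrainingTax:
    departures = team_size * annual_churn                 # replacements to onboard per year
    engineer_hours = departures * hours_per_departure
    cost = engineer_hours * lead_salary / hours_per_year  # salary only, no benefits
    billed_hours = team_size * hours_per_year             # assumes a full-time vendor team
    return RetrainingTax(engineer_hours, cost, cost / billed_hours)


def effective_hourly_rate(vendor_rate: float, tax: RetrainingTax) -> float:
    """Billed vendor rate plus the hidden internal retraining cost per billed hour."""
    return vendor_rate + tax.per_billed_hour


# Range from the text: 30% churn x 10 hours up to 40% churn x 20 hours,
# on a hypothetical 20-person vendor team, with a $300,000/year lead engineer.
low = retraining_tax(team_size=20, annual_churn=0.30, hours_per_departure=10, lead_salary=300_000)
high = retraining_tax(team_size=20, annual_churn=0.40, hours_per_departure=20, lead_salary=300_000)
print(f"{low.engineer_hours:.0f} to {high.engineer_hours:.0f} lead-engineer hours per year")
# 60 to 160 lead-engineer hours per year
print(f"${low.cost:,.0f} to ${high.cost:,.0f} per year in lead time")
# $8,654 to $23,077 per year in lead time
print(f"effective rate at a hypothetical $60/h: ${effective_hourly_rate(60.0, high):.2f}/h")
# effective rate at a hypothetical $60/h: $60.55/h
```

Keeping the retraining tax separate from the billed rate makes it straightforward to compare vendors whose hourly rates and churn levels differ.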

The "Contextual Memory" Problem in AI Logic

AI training is not a series of isolated tasks. It is a long-form conversation between the model and the trainers. Over months, trainers develop an intuitive understanding of a model’s specific weaknesses. They know exactly how to "nudge" the model to overcome a persistent logical flaw in Python coding or a specific bias in financial forecasting.

We call this Contextual Memory.

When a "Managed Pod" stays together for a year, they develop a collective intelligence regarding the project. They stop being "labelers" and start being "mentors" to the model. Stability ensures that the feedback loop is consistent, allowing the model to lock in gains rather than oscillating between different human preferences.

If the person teaching the model changes every ninety days, the model is being raised by a village of ghosts. There is no continuity of logic, and the resulting intelligence reflects that fragmentation.

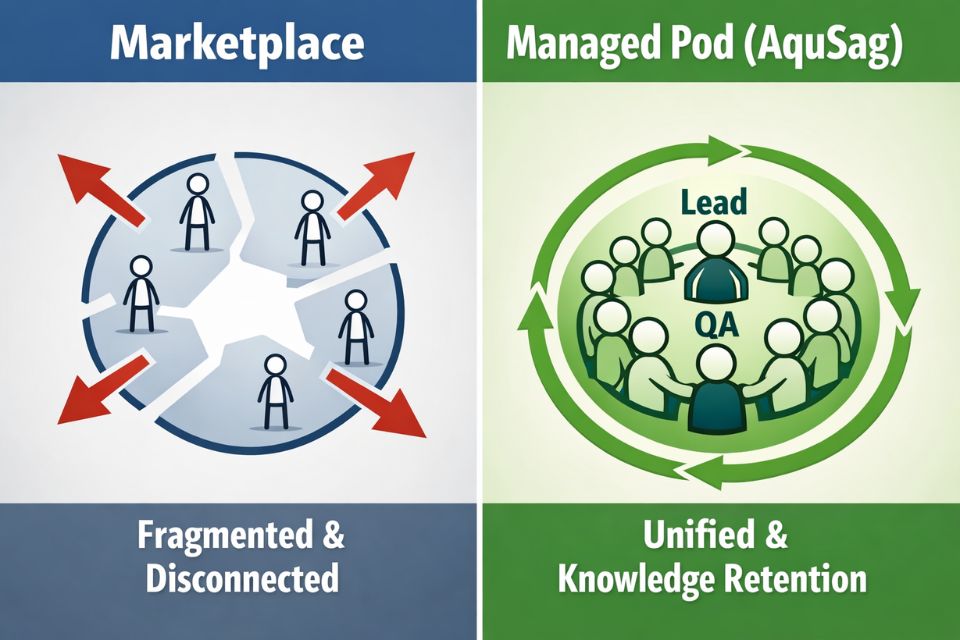

Why Traditional Marketplaces Fail the Stability Test

The current trend in the industry is to use massive talent marketplaces. These platforms provide access to millions of workers, which sounds great for scaling. However, these marketplaces are built on the "gig economy" model. Workers are incentivized to jump from task to task, seeking the highest immediate payout. There is no loyalty, no career path, and no institutional knowledge.

In a marketplace model, the worker's relationship is with the platform, not the project. This fragmentation is the enemy of stability. When a specialized contributor finds a higher-paying task on another platform, they disappear. The AI lab is left with a hole in its data pipeline and no way to recover the lost context.

AquSag’s "Stability as a Service": A New Paradigm

At AquSag Technologies, we realized early on that to hit $1M in monthly recurring revenue (MRR) and beyond, we could not just be a "body shop." We had to be a stability partner. This led to the development of our Stability as a Service (StaaS) model.

Our approach is built on three pillars:

1. The Managed Pod Architecture

We do not send individuals to our clients. We deploy "Pods." Each pod is a self-governing unit consisting of specialized SMEs, a dedicated Team Lead, and an internal QA specialist. This structure creates a "buffer" for the client. If a single person does leave, the Team Lead and the rest of the Pod preserve the context. The client never feels the "bump" of a transition because the Pod as a whole retains the knowledge.

2. Career Pathing for the Top 1%

We don't hire "giggers." We hire engineers, lawyers, and scientists who want to build a career in AI. By providing competitive salaries, benefits, and a clear growth trajectory within AquSag, we achieve a 95% retention rate. Our people stay because they are part of a mission, not just a spreadsheet.

3. Proactive Knowledge Redundancy

Within every AquSag pod, we mandate "Shadowing." Every edge case discovered by one SME is documented in a centralized, project-specific knowledge base. This ensures that the "Project Context" is stored in a system, not just in one person's head.

The Security and Compliance Dimension of Stability

Beyond model performance, there is the critical issue of data security. AI training often involves sensitive IP, proprietary codebases, or "unreleased" model capabilities.

Talent churn is a major security risk. The more people who cycle through your project, the higher the "surface area" for data leaks or IP theft. A revolving door of contractors means your sensitive data is sitting on hundreds of different local machines over the course of a year.

By maintaining a stable, long-term team, we drastically reduce the security risk. Our team members are vetted, long-term employees who operate under strict, audited security protocols. We don't just protect your data. We protect your future competitive advantage.

High-Fidelity Training Requires High-Fidelity Teams

As we look toward the future of RLHF and red-teaming, the complexity will only increase. We are entering an era where AI will be used to generate its own training data, but it will still need humans to act as the ultimate arbiters of truth and logic.

If those human arbiters are constantly changing, the "truth" becomes a moving target.

Stability is not just an HR metric. In the context of AI, stability is a technical requirement for high-fidelity reasoning.

How to Evaluate Your Current Vendor for Stability

If you are currently working with a delivery partner, ask these four questions:

- What is your month-over-month attrition rate for my specific project? (If it is over 5%, you have a problem.)

- How much time do my internal leads spend retraining your new hires?

- Do you provide a dedicated Team Lead who owns the project's institutional knowledge?

- What is your strategy for keeping SMEs engaged beyond a 3-month contract?

If they cannot answer these questions, you aren't buying a solution. You are buying a headache that will eventually show up in your model's performance metrics.
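The first question above can be checked with a simple calculation. A common HR convention divides the month's departures by that month's average headcount. Converting the result to a yearly figure, under the assumption that the monthly rate stays constant and counting only members of the original team, shows how quickly a monthly rate compounds. The function names, the 20-person team, and the departure counts below are hypothetical illustrations.

```python
def monthly_attrition_rate(departures: int, headcount_start: int, headcount_end: int) -> float:
    """Departures during the month divided by average headcount for that month."""
    avg_headcount = (headcount_start + headcount_end) / 2
    if avg_headcount <= 0:
        raise ValueError("average headcount must be positive")
    return departures / avg_headcount


def compounded_annual_attrition(monthly_rate: float, months: int = 12) -> float:
    """Share of the original team gone after `months`, if each month the same
    fraction of the remaining original members leaves."""
    if not 0.0 <= monthly_rate <= 1.0:
        raise ValueError("monthly_rate must be between 0 and 1")
    return 1 - (1 - monthly_rate) ** months


# Hypothetical project: 20 SMEs, with departures backfilled within the month.
at_threshold = monthly_attrition_rate(departures=1, headcount_start=20, headcount_end=20)
above_threshold = monthly_attrition_rate(departures=2, headcount_start=20, headcount_end=20)
print(f"{at_threshold:.0%} monthly -> {compounded_annual_attrition(at_threshold):.1%} per year")
# 5% monthly -> 46.0% per year
print(f"{above_threshold:.0%} monthly -> {compounded_annual_attrition(above_threshold):.1%} per year")
# 10% monthly -> 71.8% per year
```

Because compounding turns a modest-sounding monthly rate into a much larger annual one, it is worth confirming whether a vendor's quoted attrition or retention figure is monthly or annual before comparing it with other numbers.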

Investing in the Foundation

The "Gold Rush" of AI has led to a lot of "shaky" construction. Labs are building massive structures on foundations of sand: fragmented, unstable workforces that cannot support the weight of complex reasoning tasks.

AquSag Technologies was built to be the "Stone Foundation" for the world's leading AI labs. We believe that the only way to build a $1M MRR partnership is to deliver value that compounds over time. That value is rooted in Stability.

We invite you to stop the cycle of churn and start building with a partner that values your model's context as much as you do.

The AquSag Advantage: Let's Build Your Elite Pod

Is talent churn slowing down your model's development? Are your lead engineers spending more time onboarding contractors than shipping code?

AquSag Technologies provides specialized, managed pods of STEM, Legal, and Medical experts with an industry-leading 95% retention rate. We handle the technical "heavy lifting" so you can focus on the architecture of the future.

Don't let your model's intelligence leak out through a revolving door.

Contact AquSag Technologies Today to Deploy Your Stable Pod in 7 Days