In the early evolution of Large Language Models, the goal was simple: predict the next token. Fed enough text, a model became remarkably good at mimicking human writing. However, as the field has learned on the road to 2026, mimicry is not the same as intelligence. To build a model capable of solving a multivariable calculus problem or drafting a bulletproof legal defense, predicting words is not enough. The model must follow a logical path. This is the essence of Chain-of-Thought (CoT) reasoning.

The challenge facing modern AI labs is that CoT training data is only as good as the mind that created it. If a generalist data labeler tries to write a step-by-step proof for a physics problem they do not fundamentally understand, they will inevitably skip steps or make unjustified leaps. A model trained on these "broken chains" learns to "fake" reasoning: it produces a series of steps that look logical but lead to a false conclusion.

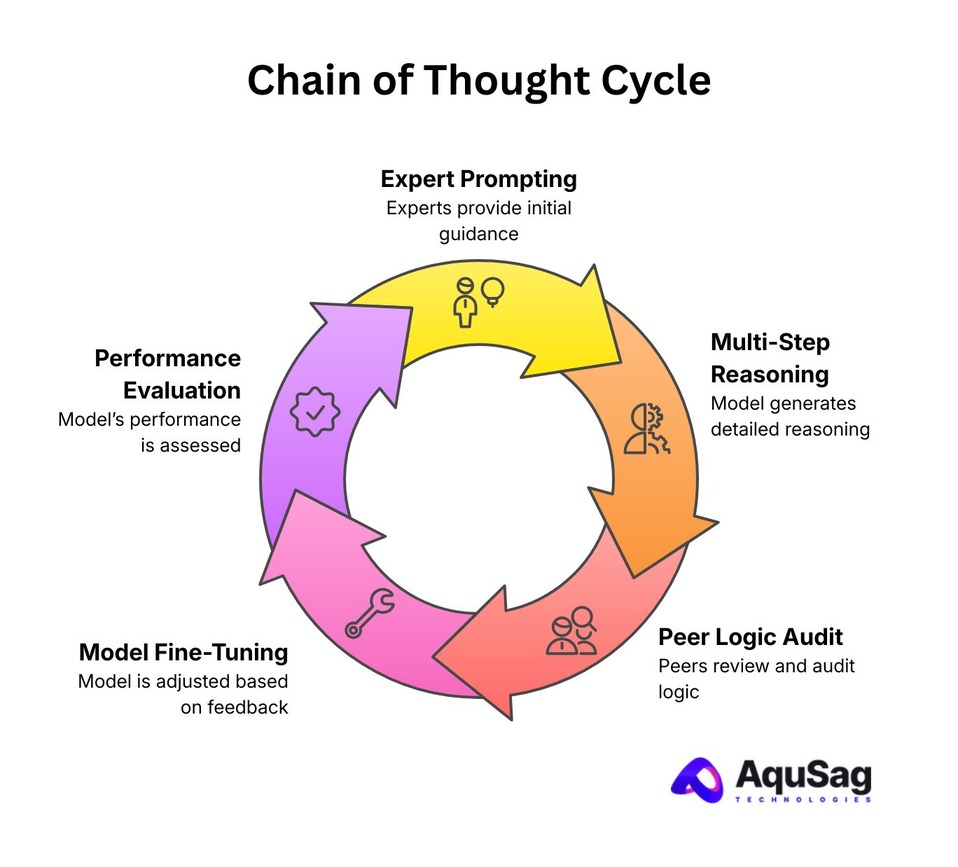

At AquSag Technologies, we treat logic as a deterministic product. We have built a "Logic Factory" where PhDs and STEM researchers don't just provide answers: they architect the cognitive pathways that allow models to think.

The Architecture of a Reasoning Chain

Most training data focuses on the "what": the final output. Chain-of-Thought data focuses on the "how." A high-fidelity reasoning chain consists of several critical components that a generalist simply cannot produce:

1. First-Principle Identification

Before a single step is written, the expert identifies the foundational laws or rules that govern the problem. In finance, this might be a specific accounting standard; in engineering, a law of thermodynamics. Every later step is then anchored to an established rule rather than to a remembered pattern.

2. Atomic Step Deconstruction

A common failure in AI reasoning is "skipping": a model jumps from step A to step D because it has seen the pattern before. Our experts break logic down into "atomic steps," where each step is a single, verifiable logical transformation. This granularity helps a model generalize its reasoning to new, unseen problems.
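To illustrate what "atomic" can mean in practice, here is a minimal Python sketch. It assumes each step of an arithmetic chain is written as `expression = value` and uses "at most one arithmetic operation per step" as a stand-in for a single verifiable transformation. The function names `operation_count` and `non_atomic_steps` are hypothetical, not part of any existing tool, and real reasoning chains would need a far richer notion of a step.

```python
import ast


def operation_count(expression: str) -> int:
    """Count binary arithmetic operations (+, -, *, /, ...) in an expression."""
    tree = ast.parse(expression.strip(), mode="eval")
    return sum(isinstance(node, ast.BinOp) for node in ast.walk(tree))


def non_atomic_steps(steps: list[str], max_ops: int = 1) -> list[int]:
    """Return 1-based indices of steps whose left-hand side does more than max_ops operations.

    Hypothetical heuristic: one operation per step approximates one logical move.
    """
    flagged = []
    for i, claim in enumerate(steps, start=1):
        lhs, _rhs = claim.split("=", 1)
        if operation_count(lhs) > max_ops:
            flagged.append(i)
    return flagged


# Solving 3x + 5 = 20, written two ways.
atomic_chain = ["20 - 5 = 15", "15 / 3 = 5"]   # subtract 5, then divide by 3
skipping_chain = ["(20 - 5) / 3 = 5"]          # both moves collapsed into one step

print(non_atomic_steps(atomic_chain))    # []
print(non_atomic_steps(skipping_chain))  # [1]
```

The skipping chain reaches the right answer, but because two transformations are fused into one step, a reviewer (or a model) cannot check them separately.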

3. Explicit Error Correction (Self-Correction Chains)

True intelligence involves recognizing when a path is leading to a dead end. We train models on "Correction Chains" where the expert intentionally explores a common wrong path, explains why it is incorrect, and then pivots back to the right logic. This teaches the model the vital skill of self-correction.
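A correction chain can be stored as a structured record before it is rendered into training text. The sketch below assumes a hypothetical `CorrectionChain` record holding a tempting wrong path, a diagnosis, and the corrected path; the field names and the `Attempt:`/`Check:`/`Step:` labels are illustrative choices, not a standard format. The example uses the classic round-trip average-speed trap, where averaging the two speeds gives 45 km/h but the correct answer is 40 km/h.

```python
from dataclasses import dataclass


@dataclass
class CorrectionChain:
    """Hypothetical training record: a tempting wrong path, why it fails, and the pivot."""
    problem: str
    wrong_path: list[str]
    diagnosis: str
    corrected_path: list[str]
    answer: str

    def to_training_text(self) -> str:
        """Render the record as a single reasoning trace, wrong path first."""
        lines = [f"Problem: {self.problem}"]
        lines += [f"Attempt: {step}" for step in self.wrong_path]
        lines.append(f"Check: {self.diagnosis}")
        lines += [f"Step: {step}" for step in self.corrected_path]
        lines.append(f"Answer: {self.answer}")
        return "\n".join(lines)


# Numeric check of the corrected path: take 60 km each way (any distance gives the same result).
distance_km = 60
total_hours = distance_km / 30 + distance_km / 60  # 2 h out + 1 h back
average_speed = 2 * distance_km / total_hours     # 120 km / 3 h

example = CorrectionChain(
    problem="A car drives to a town at 30 km/h and returns at 60 km/h. What is its average speed?",
    wrong_path=["Average the two speeds: (30 + 60) / 2 = 45 km/h."],
    diagnosis="The car spends twice as long at 30 km/h, so the two speeds cannot be weighted equally.",
    corrected_path=[
        "Let each leg be d km; total time is d/30 + d/60 = d/20 hours.",
        "Average speed is total distance over total time: 2d / (d/20) = 40 km/h.",
    ],
    answer=f"{average_speed:g} km/h",
)
print(example.to_training_text())
```

Placing the wrong attempt before the diagnosis mirrors the order described above: the trace shows the mistake, names why it fails, and then pivots to the correct logic.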

The Subject Matter Gap in Reasoning

You cannot teach a model to "think" about a topic you do not understand. This is where the Subject Matter Gap becomes a hard barrier to model performance.

If you are training a model for medical diagnostics, a generalist might provide a reasoning chain based on common symptoms. A medical doctor, however, will provide a chain based on differential diagnosis, ruling out rare but high-risk conditions through a series of logical filters.

At AquSag, our Managed Pod Model ensures that the person writing the CoT data is at least as qualified as the professional the AI is intended to assist. We don't just hire people who can read a rubric; we hire people who can write the textbook.

Logic is not a style of writing. It is a mathematical structure. If the structure is weak, the model will collapse under the weight of enterprise complexity.

Deterministic Quality in Logic Auditing

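How do you audit a thought? Traditional QA methods break down when applied to reasoning. You cannot use a majority vote to decide whether a mathematical proof is valid: three reviewers agreeing on a proof does not make it correct, and a single invalid step invalidates the whole chain.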

We apply our Deterministic Quality Frameworks to every reasoning chain. Each step in the CoT is treated as a logic gate.

- Validation: Does Step 2 logically follow from Step 1?

- Verification: Is the calculation in Step 3 mathematically correct?

- Completeness: Is there a hidden assumption in Step 4 that hasn't been explained?

This level of rigor ensures that your model is not just a "fast talker" but a "clear thinker." Training on step-verified chains is designed to reduce hallucinations by teaching the model to check its own work as it generates it.
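The three gates can be sketched for simple arithmetic chains. This is a minimal illustration under stated assumptions, not a description of any production framework: each step is a claim like `20 - 5 = 15`, "follows from the previous step" is approximated as "uses the previous step's result as an operand," and a "hidden assumption" is any number that is neither given in the problem nor derived in an earlier step. `audit_chain` and `AuditReport` are hypothetical names.

```python
import ast
import operator
from dataclasses import dataclass, field

# Assumption: step claims use only numbers and the four binary operators below.
OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate(node: ast.AST) -> float:
    """Safely evaluate a parsed arithmetic expression (numbers and + - * / only)."""
    if isinstance(node, ast.Expression):
        return evaluate(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in OPS:
        return OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
    raise ValueError(f"unsupported syntax: {ast.dump(node)}")


def literals(node: ast.AST) -> set[float]:
    """Collect every numeric literal that appears in an expression."""
    return {n.value for n in ast.walk(node)
            if isinstance(n, ast.Constant) and isinstance(n.value, (int, float))}


@dataclass
class AuditReport:
    failures: list[str] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        return not self.failures


def audit_chain(givens: set[float], steps: list[str]) -> AuditReport:
    """Run completeness, validation, and verification gates over every step."""
    report = AuditReport()
    known = set(givens)  # values the chain is allowed to use so far
    previous = None      # result of the preceding step
    for i, claim in enumerate(steps, start=1):
        lhs_text, rhs_text = (part.strip() for part in claim.split("=", 1))
        lhs = ast.parse(lhs_text, mode="eval")
        rhs = float(rhs_text)
        used = literals(lhs)
        # Completeness: every operand must be a given or an earlier result.
        hidden = used - known
        if hidden:
            report.failures.append(f"step {i}: unexplained values {sorted(hidden)}")
        # Validation: after step 1, a step must build on the previous result.
        if previous is not None and previous not in used:
            report.failures.append(f"step {i}: does not use result of step {i - 1}")
        # Verification: the arithmetic itself must be correct.
        if abs(evaluate(lhs) - rhs) > 1e-9:
            report.failures.append(f"step {i}: {lhs_text} != {rhs_text}")
        known.add(rhs)
        previous = rhs
    return report


givens = {3, 5, 20}  # numbers stated in the problem 3x + 5 = 20
print(audit_chain(givens, ["20 - 5 = 15", "15 / 3 = 5"]).passed)     # True
print(audit_chain(givens, ["20 - 5 = 15", "15 / 3 = 6"]).failures)   # ['step 2: 15 / 3 != 6']
print(audit_chain(givens, ["20 - 5 = 15", "15 * 2 = 30"]).failures)  # ['step 2: unexplained values [2]']
print(audit_chain(givens, ["20 - 5 = 15", "20 - 3 = 17"]).failures)  # ['step 2: does not use result of step 1']
```

Because each gate is a deterministic check rather than a vote, the same chain always produces the same report, and a single failing step is enough to reject it.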

From Static Data to Dynamic Reasoning Pods

The requirements for CoT data shift as your model evolves. In the early stages of fine-tuning, you might need broad logical structures. As you move toward production, you need "Adversarial Logic": chains designed to handle edge cases and trick questions.

This is where the Elastic Bench becomes your strategic advantage. We can ramp up a pod of specialized logicians for a high-intensity reasoning sprint and then scale back once your model hits its accuracy benchmarks. This allows you to maintain the highest level of cognitive training without the permanent overhead of a massive internal research team.

The Business Value of "High-Logic" Models

Why invest in expert-led CoT? Because in 2026, "Average AI" is a commodity. "Expert AI" is the product.

- User Trust: A model that can explain its work in a logical, step-by-step manner is far more likely to be adopted by professionals.

- Accuracy: Reasoning-focused models tend to outperform pattern-matching models on demanding benchmarks such as MMLU and MATH.

- Safety: A model that reasons explicitly is easier to align with safety guardrails because it can follow the "why" behind the rules.

Building the Minds of the Future

The next frontier of Artificial Intelligence is not about more parameters: it is about better reasoning. To reach that frontier, your model needs a world-class education.

At AquSag Technologies, we provide the teachers. Our pods of PhDs and specialized researchers are the engine behind the world's most sophisticated reasoning models. We don't just label data: we build logic.

Does Your Model Struggle with Complex Logic?

If your LLM is failing at multi-step problems or technical reasoning, your training data is the likely culprit. Do not settle for "broken chains."

Contact AquSag Technologies today to learn how our Expert CoT Pods can transform your model from a pattern-matcher into a logical powerhouse. Ask about our Logic-Audit pilot program.